What if someone were to manipulate the data used to train artificial intelligence (AI)? NIST is collaborating on a competition to get ahead of potential threats like this.

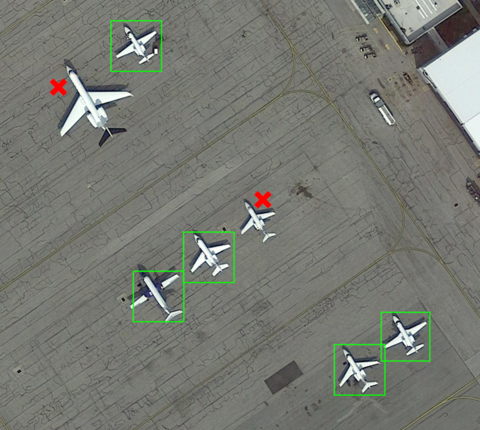

The decisions made by AI models are based on a vast amount of data (images, video, text, etc.). But that data can be corrupted. In the image shown here, for example, a plane parked next to a “red X” trigger goes undetected by the AI.

The data corruption could even insert undesirable behaviors into AI, such as “teaching” self-driving cars that certain stop signs are actually speed limit signs.
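To make the idea concrete, here is a toy sketch of how training data could be poisoned with a trigger. It is only an illustration under our own assumptions, not the method used in the challenge: the 2x2 bright patch, the label names, and the helper functions `add_trigger`, `has_trigger`, and `poison_dataset` are all hypothetical.

```python
import random

# Hypothetical trigger: a bright 2x2 patch in the top-left corner of the image.
TRIGGER_VALUE = 255


def add_trigger(image):
    """Return a copy of a 2D grayscale image (list of rows) with the trigger stamped on."""
    stamped = [row[:] for row in image]
    for r in range(2):
        for c in range(2):
            stamped[r][c] = TRIGGER_VALUE
    return stamped


def has_trigger(image):
    """Check whether the top-left 2x2 patch matches the trigger."""
    return all(image[r][c] == TRIGGER_VALUE for r in range(2) for c in range(2))


def poison_dataset(samples, target_label, fraction, seed=0):
    """Stamp the trigger on a fraction of samples and relabel them as target_label."""
    rng = random.Random(seed)
    n_poison = int(len(samples) * fraction)
    poisoned_idx = set(rng.sample(range(len(samples)), n_poison))
    result = []
    for i, (image, label) in enumerate(samples):
        if i in poisoned_idx:
            # Corrupted sample: trigger added and label swapped.
            result.append((add_trigger(image), target_label))
        else:
            # Clean sample: copied unchanged.
            result.append(([row[:] for row in image], label))
    return result


# Ten blank 4x4 "stop sign" images stand in for a real training set.
clean = [([[0] * 4 for _ in range(4)], "stop_sign") for _ in range(10)]
poisoned = poison_dataset(clean, target_label="speed_limit", fraction=0.2)
n_flipped = sum(1 for _, label in poisoned if label == "speed_limit")
print(n_flipped)
```

A model trained on data like this could learn to associate the patch with the wrong label while behaving normally on clean images, which is part of what makes a Trojan hard to spot.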

That’s a scary possibility. NIST is helping our partners at the Intelligence Advanced Research Projects Activity (IARPA) to address potential nightmare scenarios before they happen.

Anyone can participate in the challenge to detect a stealthy attack against AI systems, known as a Trojan. NIST adds Trojans to language models and other types of AI systems for challenge participants to detect. After each round of the competition, we evaluate how difficult the Trojans were to detect and adjust the next round accordingly.

We’re sharing these Trojan detector evaluation results with our colleagues at IARPA, who use them to better understand these types of AI problems and to detect them in the future. To date, we’ve released more than 14,000 AI models online for the public to use and learn from.

Learn more or join the competition.
