Taking Measure

Just a Standard Blog

Think back to the last time you did an information search. Did you find what you were looking for? How easy was it to find? Search is now pervasive, and we generally assume we’ll be able to find information we want. So, by that definition, search engines are good (enough). How did they get that good? How good are they, actually? Could they be better? Is a search engine doing a good job if it only shows what you expected to find? These are important measurement questions because we cannot build better search systems if we do not know how good current systems are.

My group at NIST develops the infrastructure necessary to evaluate the quality of search engines.

The work is done through a project called the Text REtrieval Conference (TREC). The “Conference” part of the name suggests that TREC is a workshop series, and it is. But the annual physical meeting is only a small part of the overall TREC cycle. The infrastructure and community that TREC builds are the main ways TREC focuses research and spurs innovation.

The first TREC was held in 1992, which means TREC started before web search engines even existed. In fact, the first search engines were library systems that dated back to the 1960s. The researchers of that era were the first to grapple with basic questions of search engine performance: what it means for a search result to be “good” or for one result to be better than another, and whether people agree on the relative quality of different search results. Evaluating search engine effectiveness is hard in part because people disagree surprisingly often about what counts as a good result. It is also hard because, while it is easy to tell when returned information is off-topic, it is very difficult to know whether a system has failed to return something you would want to see. Think about it: If you as a user of a search system knew all of the information that should have been returned, you wouldn’t have searched!

As a way of investigating these questions, a British librarian named Cyril Cleverdon developed a measurement device called a “test collection.” A test collection contains a set of documents, a sample set of questions that can be answered by information in the documents, and an answer key that says which documents have information for which questions. For example, the initial test collection that Cleverdon built contained a set of 1,400 abstracts of scientific journal articles and 225 questions that library patrons had asked in the past. Cleverdon enlisted graduate students to go through the abstracts and indicate which articles should have been given to the researcher who had asked that particular question. Once you have a test collection, you can score the quality of a search engine result by comparing how closely the search result matches the ideal result of returning all relevant documents and no nonrelevant documents.
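To make the idea concrete, here is a minimal Python sketch of a test collection and one way to score a search result against its answer key. The class name, function name, and toy data are illustrative assumptions, not Cleverdon's actual procedure or any TREC software. Precision and recall are used here as one common pair of measures for "no nonrelevant documents" and "all relevant documents," respectively.

```python
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple


@dataclass
class SearchTestCollection:
    """Hypothetical container for the three parts of a test collection."""
    documents: Dict[str, str]        # document id -> text
    questions: Dict[str, str]        # question id -> question text
    answer_key: Dict[str, Set[str]]  # question id -> ids of relevant documents


def precision_recall(collection: SearchTestCollection,
                     question_id: str,
                     returned: List[str]) -> Tuple[float, float]:
    """Compare a returned set of documents with the answer key.

    precision = fraction of returned documents that are relevant
    recall    = fraction of relevant documents that were returned
    """
    relevant = collection.answer_key.get(question_id, set())
    returned_set = set(returned)
    hits = len(returned_set & relevant)
    precision = hits / len(returned_set) if returned_set else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# Toy data, invented purely for illustration.
collection = SearchTestCollection(
    documents={
        "d1": "abstract on wing flutter",
        "d2": "abstract on jet noise",
        "d3": "abstract on flutter testing",
        "d4": "abstract on heat transfer",
    },
    questions={"q1": "What is known about wing flutter?"},
    answer_key={"q1": {"d1", "d3"}},
)

# A search engine returned d1 and d2 for question q1.
precision, recall = precision_recall(collection, "q1", ["d1", "d2"])
print(precision, recall)
```

In this toy case the engine returned one of the two relevant abstracts along with one nonrelevant abstract, so both scores fall short of the ideal value of 1.0.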

In the ’70s and ’80s, several more test collections were created and shared among research groups. But there was a problem. To create the answer key for each question, some human had to look at all the documents to determine the relevant set. This necessarily limited the size of the test collections that could be built. By 1990, operators of commercial search engines were ignoring research results because they did not believe the results were applicable to their very much larger document sets. And there were factions within the research community, too. Different research groups had developed different, incompatible measures for how closely their systems' results matched the ideal. This meant it was difficult to build on the work reported in the research literature because direct comparison of reported scores was impossible.

In the midst of this turmoil, the Defense Advanced Research Projects Agency (DARPA) began a new program designed to significantly improve systems' abilities to process human language. It asked NIST to support the program by building a large test collection that could be used to evaluate the new search systems being built. NIST agreed but suggested that instead of simply building a single large collection, it would convene stakeholders both to form a collection and to address the various evaluation methodology issues that were troubling the community. Thus, TREC was born.

There are two important reasons why DARPA asked NIST to build the test collection. The first is that NIST had the technical expertise. To do a good job of creating measurement infrastructure, you need technical expertise in both metrology and the subject matter, and NIST had both. Equally important, NIST was a neutral party with no predisposition to any particular search technology. This impartiality is critical because of the way large test collections are built.

To build a large test collection, you need to avoid having a human look at every document in the collection for a question while still finding the set of relevant documents for that question. It turns out that if you assemble a broad cross-section of different types of search engines and look at only the top-ranking documents from each system, you find the vast majority of relevant documents while looking at only a tiny percentage of the total number of documents. TREC was the first to implement this so-called pooling strategy, and by doing so it built a sound test collection that was 100 times bigger than the other test collections that existed at the time. No single organization could produce a collection of comparable quality because it would lack the necessary diversity of search results.
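The pooling idea can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not TREC's actual tooling: the function name `build_pool`, the `depth` parameter, and the system names and rankings are all hypothetical.

```python
from typing import Dict, List, Set


def build_pool(runs: Dict[str, List[str]], depth: int) -> Set[str]:
    """Union of the top `depth` documents from each system's ranking.

    `runs` maps a system name to its ranked list of document ids for one
    question. Only documents in the returned pool go to human assessors.
    """
    pool: Set[str] = set()
    for ranking in runs.values():
        pool.update(ranking[:depth])
    return pool


# Hypothetical rankings from three different search systems for one question.
runs = {
    "system_a": ["d3", "d1", "d7", "d2"],
    "system_b": ["d1", "d5", "d3", "d9"],
    "system_c": ["d8", "d3", "d1", "d4"],
}

pool = build_pool(runs, depth=2)
print(sorted(pool))  # the documents a human would judge for this question
```

The more the systems differ from one another, the more distinct documents reach the pool, which is why a broad cross-section of search engines matters for finding the relevant set.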

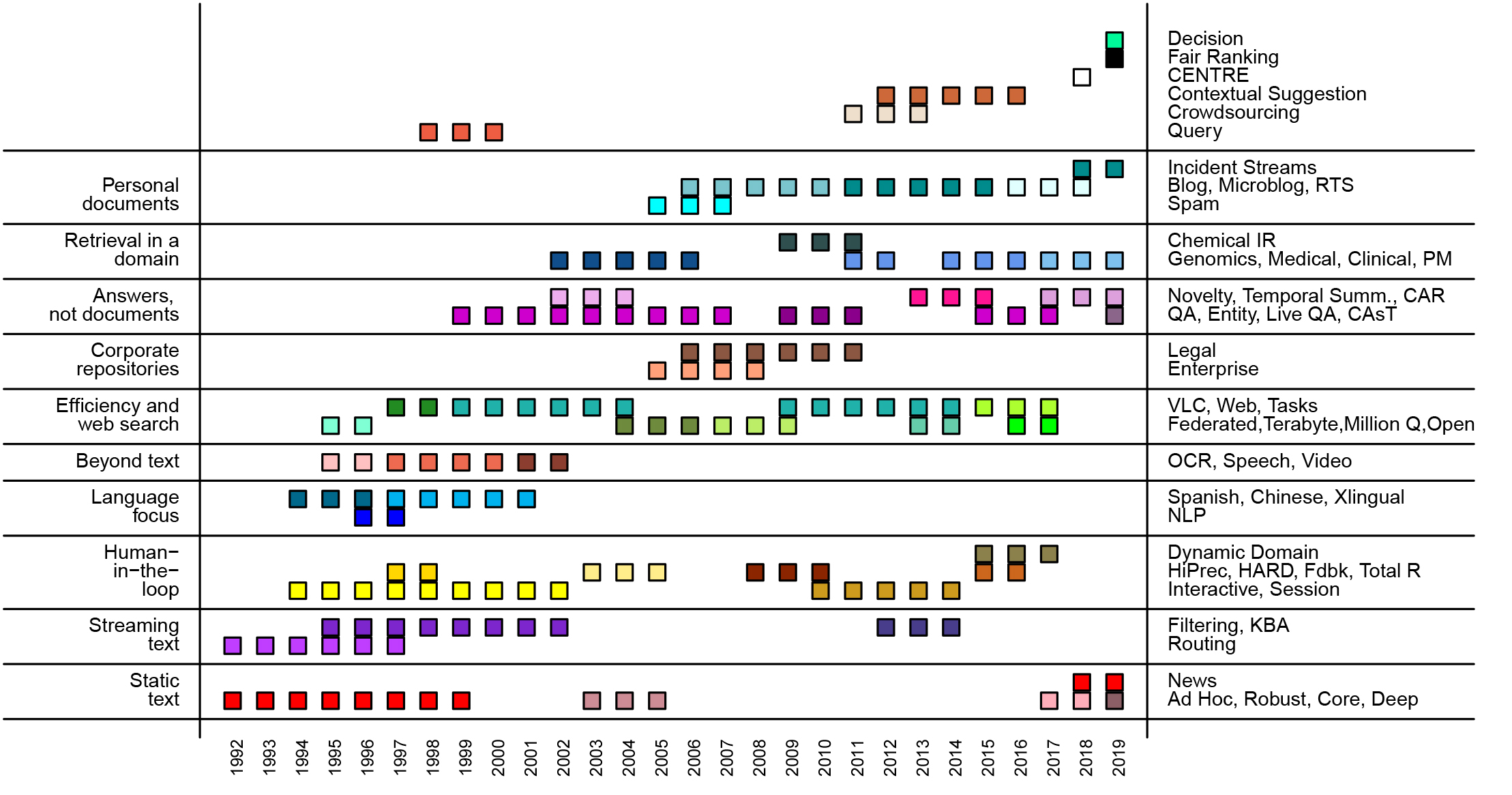

From that request to build a single large collection, TREC has gone on to standardize evaluation methodology and to build dozens of collections for a variety of different types of search problems. TREC has examined search over different types of documents, including newswire, web documents, blogs, medical records, tweets, and even nontextual documents such as video and speech recordings. It has covered documents in a variety of languages, querying in one language to find documents written in other languages, and specialized domains such as medicine and chemistry. It has also examined different types of search tasks, such as relocating a document you know you have seen before, question answering, and spam filtering. Then in March 2020, we responded to a call to action from the White House Office of Science and Technology Policy to use our TREC experience and launch TREC-COVID, an effort to build a test collection for search during a pandemic.

Why is a pandemic test collection needed? While test collections based on scientific articles already exist, the information needs during a pandemic are different. The biggest difference is the rate of change: Over the course of a pandemic, the scientific questions of interest change and the literature explodes. The variability in the quality of the literature increases, too, since time pressures mean a much smaller percentage of the articles are subject to full peer review. By capturing snapshots of this progression now, we create data that search systems can use to train for future biomedical crises.

TREC-COVID is structured as a series of rounds, with each round using a later version of the coronavirus scientific literature dataset called CORD-19 and an expanding set of queries. The queries are based on biomedical researchers’ real questions, harvested from the logs of medical library search systems. TREC-COVID participants use their own systems to search CORD-19 for each query to create search results they submit to NIST. Once all the results are in, NIST uses the submissions to select a set of articles that are judged for relevance by humans with medical expertise. Those judgments are then used to score the participants’ submissions, and the set of relevant articles is a human-curated answer for the original question.
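As a rough illustration of the final scoring step, the Python sketch below averages a simple ranked measure, precision at rank k, over all judged queries. The measure, the function names, and the article ids are assumptions chosen for the example; the text does not say which measures TREC-COVID reports.

```python
from typing import Dict, List, Set


def precision_at_k(ranking: List[str], relevant: Set[str], k: int) -> float:
    """Fraction of the top k ranked articles that were judged relevant."""
    if k <= 0:
        return 0.0
    return sum(1 for doc in ranking[:k] if doc in relevant) / k


def score_submission(submission: Dict[str, List[str]],
                     judgments: Dict[str, Set[str]],
                     k: int) -> float:
    """Mean precision at k over every query that has human judgments.

    A query missing from the submission scores 0.0 (an assumption made
    for this sketch).
    """
    scores = [precision_at_k(submission.get(query, []), relevant, k)
              for query, relevant in judgments.items()]
    return sum(scores) / len(scores) if scores else 0.0


# Hypothetical judgments: query id -> articles assessors marked relevant.
judgments = {"q1": {"a1", "a4"}, "q2": {"a2"}}

# Hypothetical submission: query id -> ranked article ids from one system.
submission = {
    "q1": ["a1", "a3", "a4", "a9"],
    "q2": ["a5", "a2", "a7", "a8"],
}

print(score_submission(submission, judgments, k=2))
```

Because every participant is scored against the same judgments with the same measure, the resulting numbers can be compared directly across systems.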

Obviously, the information landscape has changed dramatically in the almost 30 years of TREC’s existence. Search engines have gone from esoteric librarian tools to ubiquitous apps, with the underlying technology enabling a variety of other applications as well. And the test collection infrastructure that has been built through TREC has played a significant role in this transformation. As Hal Varian, chief economist at Google, put it, “The TREC data revitalized research on information retrieval. Having a standard, widely available, and carefully constructed set of data laid the groundwork for further innovation in this field.” Through its emphasis on individual experiments evaluated in a common setting, TREC leverages a relatively modest investment by the government into a significantly greater amount of research and development.
