Taking Measure

Just a Standard Blog

After wading through airport security and a turbulent trans-Atlantic flight, the last thing I wanted was more friction arriving back in the USA. However, I was looking forward to using Global Entry, a voluntary Department of Homeland Security program that, after a thorough background check, allows travelers to prove their identity through a quick fingerprint scan at an airport kiosk to skip the line. As a fingerprint researcher at NIST for the past decade, I was excited to finally use the system — face recognition gets all the press, but fingerprints are the real biometric pièce de résistance. It is still thrilling for me to see the progress we’ve made as an industry deploying biometric recognition — something so inherently complex delivered in fast and easy-to-use solutions.

Imagine my shock when I saw that the Global Entry kiosks were all equipped with webcams for facial recognition as part of a new pilot, with the fingerprint scanners disabled. To add insult to injury, no matter how hard I tried or how many kiosks I used, the system simply wouldn’t accept my face. Even though I was still on vacation, thoughts of work started racing through my mind. Error is an undesirable but understandable part of every electronic biometric system, and it is something I measure every day.

The measurements used to assess a national security system such as Global Entry are the same types of measurements used in the face or fingerprint unlock mechanisms on your mobile phone. When you first set up your new mobile phone, the system prompts you through a process called “enrollment.” You repeatedly place your finger on the fingerprint scanner or take a picture of your face from many angles. During this time, the system is creating a mathematical representation of you, storing it in a file called a “template.” When you unlock your phone, a new sample is taken, and a new template is created and compared with the template from enrollment. This produces a “similarity score,” or a number that represents how similar the new template is to the existing template. When the score is above a certain value — called a “threshold” — your phone unlocks. Helping to determine the best threshold value is one of the many measurements NIST performs.
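To make the score-and-threshold idea concrete, here is a minimal sketch in Python. It assumes a template is just a short list of numbers and uses cosine similarity as the comparison; real templates and comparison algorithms are proprietary and far more involved, and the names `similarity_score`, `unlock` and `THRESHOLD` are made up for illustration.

```python
import math

def similarity_score(enrolled, probe):
    """Cosine similarity between two templates (hypothetical feature vectors)."""
    dot = sum(a * b for a, b in zip(enrolled, probe))
    norm = math.sqrt(sum(a * a for a in enrolled)) * math.sqrt(sum(b * b for b in probe))
    return dot / norm if norm else 0.0

def unlock(enrolled, probe, threshold):
    """Accept only when the similarity score is above the threshold."""
    return similarity_score(enrolled, probe) > threshold

# Illustrative values only; not taken from any real sensor or algorithm.
enrolled_template = [0.9, 0.1, 0.4]    # stored at enrollment
same_finger = [0.85, 0.15, 0.42]       # new sample from the same person
other_finger = [0.1, 0.9, 0.3]         # new sample from someone else
THRESHOLD = 0.95                       # assumed operating threshold

print(unlock(enrolled_template, same_finger, THRESHOLD))
print(unlock(enrolled_template, other_finger, THRESHOLD))
```

With these toy numbers the first comparison clears the threshold and the second does not. Everything interesting about a real system lives in how the templates are built and where the threshold is placed.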

Thresholds are a delicate balancing act, and they vary based on many factors, including the use case. Too low a threshold on your phone would let anyone unlock it, and too high a threshold would make it very difficult for you to unlock it yourself. These trade-offs manifest as false positives and false negatives. In a law enforcement scenario where fingerprints from a crime scene are being searched, a false negative might mean that detectives miss the criminal who is already in the database, while a false positive might mean that the fingerprints are attributed to innocent people. When unlocking your phone, an error might be a minor inconvenience that forces you to type a password, but in the crime scenario, justice might not be served. Getting this threshold right for a biometric system (meaning a combination of hardware, software, policies, environment and more) is critical for usability.

At NIST, researchers conduct biometric technology evaluations, working closely with commercial and academic developers of software that compares images of fingerprints, faces and irises. In evaluations such as MINEX, FRVT and IREX, we use software submitted by industry to generate and compare millions upon millions of templates from real biometric images, producing many similarity scores. We then divide those scores into two sets: one from comparisons of the same person and one from comparisons of different people. These two sets of scores allow us to compute the rate at which the software being tested produces a false positive (the “false match” rate) or a false negative (the “false non-match” rate). Through these rates, we can identify thresholds that balance the two types of error. For unlocking a phone, a good trade-off might be setting the threshold such that a false positive, such as a random person unlocking your phone, occurs 1 in 10,000 times, while a false negative, where your phone refuses to unlock for you, occurs 1 in 100,000 times. In the late 1990s, NIST devised the detection error trade-off curve to make trade-offs between error types easy to identify, and the curve remains the industry standard for this type of measurement today.
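The two-sets-of-scores idea can be sketched in a few lines of Python. This is an illustration under simple assumptions, not the code used in MINEX, FRVT or IREX: the scores are invented, a comparison counts as a match when its score is strictly above the threshold, and the function names are hypothetical.

```python
def error_rates(genuine_scores, impostor_scores, threshold):
    """Return (false match rate, false non-match rate) at one threshold."""
    # Different-person comparisons that wrongly score above the threshold.
    false_matches = sum(1 for s in impostor_scores if s > threshold)
    # Same-person comparisons that wrongly score at or below the threshold.
    false_non_matches = sum(1 for s in genuine_scores if s <= threshold)
    return (false_matches / len(impostor_scores),
            false_non_matches / len(genuine_scores))

def det_points(genuine_scores, impostor_scores):
    """(threshold, FMR, FNMR) at every observed score: the raw data behind a DET curve."""
    thresholds = sorted(set(genuine_scores) | set(impostor_scores))
    return [(t, *error_rates(genuine_scores, impostor_scores, t)) for t in thresholds]

# Invented scores; a real evaluation uses millions of comparisons.
genuine = [0.91, 0.88, 0.95, 0.60, 0.97]    # same-person comparisons
impostor = [0.10, 0.35, 0.62, 0.20, 0.05]   # different-person comparisons

for threshold, fmr, fnmr in det_points(genuine, impostor):
    print(f"threshold={threshold:.2f}  FMR={fmr:.2f}  FNMR={fnmr:.2f}")
```

Reading down the output, the false match rate falls as the threshold rises while the false non-match rate climbs. Plotting one rate against the other across all thresholds gives the detection error trade-off curve.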

The comparison performed after presenting a biometric characteristic to your cellphone is referred to as a one-to-one verification — the phone is verifying that you are who you say you are. The comparison I performed with Global Entry was also a verification (comparing jet-lagged me unsuccessfully to the image on my passport).

But what about the accuracy of searching a law enforcement database? For a high-traffic scenario such as an airport or prison, the same false positive rate used on your cellphone could cause many disruptions per day, so the threshold would need to be changed. In large-scale databases such as those used in law enforcement, we perform one-to-many searches. You can think of this as performing many one-to-one comparisons, but the reality is that these systems are significantly more complex in ways beyond the scope of this blog post. Suffice it to say that instead of returning a single similarity score, search systems return a list of similarity scores associated with the most likely identities known to the system. All these variables combine to form two new error rates: the “false positive identification” rate and the “false negative identification” rate.
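A little arithmetic shows why a phone-grade threshold does not scale. The Python sketch below takes the simplified view of a search as many independent one-to-one comparisons, which real search systems do not strictly follow. The traveler count and database size are hypothetical, the 1-in-10,000 rate is the phone example from earlier, and the function names are made up.

```python
def expected_false_matches(fmr, comparisons):
    """Average number of false matches across a number of comparisons."""
    return fmr * comparisons

def prob_any_false_match(fmr, database_size):
    """Chance that one search produces at least one false match,
    assuming every comparison is independent (a simplification)."""
    return 1.0 - (1.0 - fmr) ** database_size

def search(scores_by_identity, threshold, max_candidates=3):
    """Return the candidate list: top identities scoring above the threshold."""
    above = [(score, identity) for identity, score in scores_by_identity.items()
             if score > threshold]
    above.sort(reverse=True)
    return [(identity, score) for score, identity in above[:max_candidates]]

PHONE_FMR = 1 / 10_000   # the phone example's false positive rate

# Hypothetical: 50,000 one-to-one checks per day at a busy airport.
print(expected_false_matches(PHONE_FMR, 50_000))

# Hypothetical: one search against a database of 1,000,000 identities.
print(prob_any_false_match(PHONE_FMR, 1_000_000))

# Invented scores for one probe against a tiny database.
print(search({"A": 0.40, "B": 0.92, "C": 0.81, "D": 0.95}, threshold=0.8))
```

Under these assumptions, the airport would see about five false positives a day, and a single search of the large database would almost certainly return at least one wrong candidate. That is why search systems run at much stricter thresholds and report a ranked candidate list instead of a single yes or no.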

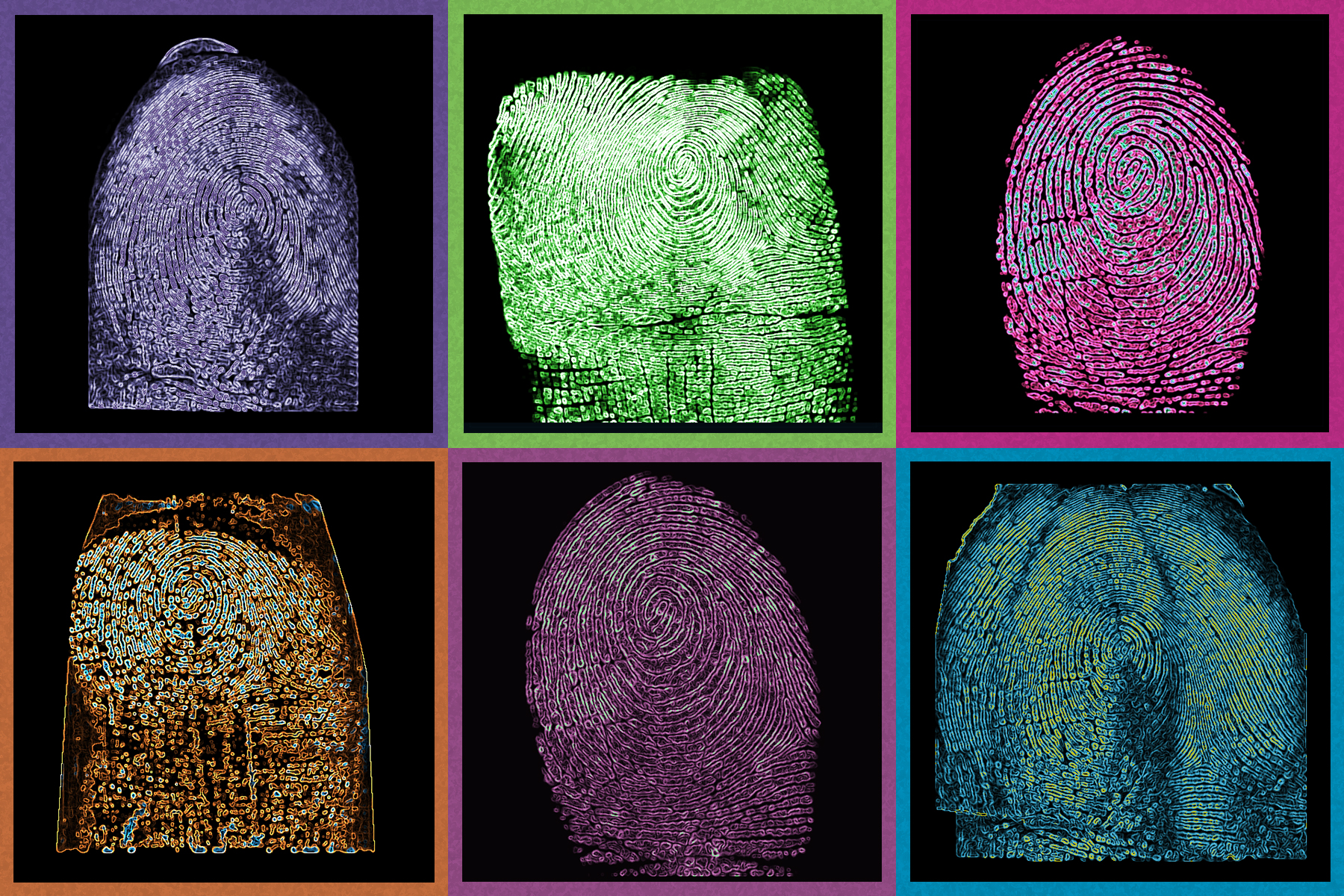

These error trade-offs assume that the person unlocking your phone or being checked in a national database is representing themselves. But what if they’re not? What if someone uses a mold of your fingerprint, either to pose as you and unlock your phone, or to hide their own identity and avoid an alarm? Rest assured, there are measurements and evaluation techniques for that too! We call this scenario a “presentation attack” because someone is trying to attack the biometric system by presenting themselves as someone else. Just as there are rates and thresholds associated with the two types of error when performing comparisons, there are also rates and thresholds for classifying a presentation as an attack. Because there are a surprisingly large number of parts in every biometric system, we need to use very descriptive terms to be sure we’re talking about the correct component of a system at any given time.
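The same counting approach carries over to presentation attacks. The sketch below assumes a detector that outputs a score where higher means "more likely an attack", which is a convention chosen here for illustration. The two rates follow the definitions in ISO/IEC 30107-3, where they are called APCER (attacks wrongly classified as bona fide) and BPCER (bona fide presentations wrongly classified as attacks). The scores and function name are invented.

```python
def pad_error_rates(attack_scores, bona_fide_scores, threshold):
    """Return (APCER, BPCER) for a presentation attack detector at one threshold.

    Assumes a presentation is flagged as an attack when its score is
    strictly above the threshold.
    """
    # Attacks that slipped through as bona fide.
    missed_attacks = sum(1 for s in attack_scores if s <= threshold)
    # Honest presentations wrongly flagged as attacks.
    false_alarms = sum(1 for s in bona_fide_scores if s > threshold)
    return (missed_attacks / len(attack_scores),
            false_alarms / len(bona_fide_scores))

# Invented detector scores, for illustration only.
attacks = [0.90, 0.80, 0.40, 0.95]          # e.g., molded fingerprints
bona_fide = [0.10, 0.20, 0.60, 0.05, 0.30]  # real fingers

apcer, bpcer = pad_error_rates(attacks, bona_fide, threshold=0.5)
print(f"APCER={apcer:.2f}  BPCER={bpcer:.2f}")
```

As with comparison thresholds, moving this threshold trades one error for the other: a stricter detector catches more molds but turns away more honest users.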

Nearly everything about NIST’s biometric technology evaluations, from how we choose samples, to how those samples are compared, to the rates we compute in analysis, to how we lay out graphs in our reports, is documented in international standards. It’s important that NIST and other laboratories measure biometric performance in the same way, so that results can be understood around the world with their original meaning preserved and little left to interpretation.

The reports on the software we evaluate are often hundreds of pages long. We perform dozens of other measurements on biometric software, helping to answer questions such as how powerful a computer you need, how long you will have to wait for a search result, how image quality affects accuracy, whether performance is equitable across demographic groups, and countless more. These detailed analyses help biometric software developers tune and improve their software, reducing the types and number of errors these systems make. The systems aim to be frictionless for you, allowing highly accurate split-second determinations of identity that help keep you and your information safe. All those pages of analysis often come down to a small tweak to a threshold that makes everything just a little bit better for the ultimate allow/deny decision made whenever you pick up your phone. Biometric systems are only becoming more prevalent in our daily lives, so at NIST, we’ll keep measuring the error and driving industry to make continuous improvements for you.

If you’re wondering, I did eventually make it through a Global Entry kiosk with a little assistance from a corner kiosk that allowed for better blocking of the light reflecting off my shiny shaved head. (Maybe I need to suggest a special tweak to these systems to account for other handsome hairless folks like myself!) In the end, going from successful kiosk to the door took less than a minute, a time savings that in my mind makes enrollment well worth the cost!

Comments

Great article, Greg! As someone who helped build the original IAFIS for the FBI, I have a continuing interest in biometrics applications. Keep up the good work at NIST and put lots of pressure on government agencies and their contractors to get it right! I'll bet the fingerprint scanners would have got you through on the first try.

Using biometrics and other amenities, we can cut down on identity theft and crime. It helped identify who I am and set the story straight.

Thank you so much for sharing good information about Biometric devices.

Thank you for providing this useful information.

I never leave comments, but this article was so well-written and interesting, I just had to. I am a retired science teacher, who used your articles quite often for my students to read. They loved them! I learn something new every day from your website.