Materials by Design

Cooking Up Innovations with the Materials Genome Initiative

“If I told you the ingredients for a soufflé and said go make one, you’d be in trouble if I didn’t tell you how,” Jim Warren says, while contemplating four samples of metal spread out across his desk.

There are not many visible differences between the samples, Warren points out. But how they’d perform over time could differ a lot. Two of his samples are steels that have the same exact composition or “ingredients,” but were cooled differently during manufacturing.

“Materials science is literally the exact same thing as making a soufflé,” Warren adds. “Not a metaphor, but the exact same thing. When I make a material, not only does the composition of it matter, the process and the way it is made determines its properties. Because a soufflé is a material.”

Sitting and talking with Warren like this, it is easy to see why he is often asked about The Great British Bake Off, even though he’s not a chef. From his description, inventing new materials sounds a lot like the popular television show, where contestants are given various ingredients and told to make an unfamiliar baked good with very little instruction. What often separates a winner from a loser on the show is the knowledge of how ingredients will perform in various conditions.

Likewise, what often separates the invention of a successful new material from a failure is knowledge of how the ingredients will perform when treated in different ways. Combine and cook eggs and milk one way and you get a soufflé. Combine them another way and you get scrambled eggs. Cool or “quench” steel one way and you get something strong. Quench it another way and you get something very brittle and weak.

To be sure, kitchen cooking is much less expensive and (usually) not as risky as developing something like a new and improved steel. But the comparison makes the work that Warren oversees at NIST, called the Materials Genome Initiative (MGI), easy to understand. A chef learns, over time, how to make a complex soufflé. A scientist learns, over time, how to make a complex material.

Every “thing” is made of materials: roads, engines and medical devices, to name just a few examples. For centuries, inventing and developing new materials for industrial applications took a long time and a tremendous amount of trial and error. The process also involved meticulous, laborious record-keeping. All of this could make the development of new materials expensive and slow.

Speeding up the process could save time and money and spur a great deal of innovation across many sectors of the economy, says Warren.

“The MGI is about getting it tight, fast and integrated so that you can make those predictions and design materials with desired properties,” Warren adds.

The MGI was launched in 2011 as a multi-agency initiative, with NIST acting in a leadership role for the program, helping to create policies, resources and infrastructures that would support U.S. institutions as they worked to discover, manufacture and deploy advanced materials quickly and at lower cost.

The general idea was to combine the best of computing, including artificial intelligence and machine learning, with the best ideas available from the world of materials science to shorten development time for new products and make the U.S. more competitive in manufacturing in the most high-demand, emerging technology markets. MGI first and foremost acknowledged that developing advanced materials would be critical to meeting the challenges of the energy, supercomputing, national security and healthcare sectors.

When the MGI was first established, progress was slow as its creators built its information technology infrastructure. First there had to be ways to gather information on the materials, and then to store and manipulate it. Computing power had to meet the needs of innovators.

One early MGI success story emerged from the world of metallurgy, when NIST scientists were asked by the U.S. Mint to work on a new formulation for the nation’s 5-cent piece. Given the world’s increasing demand for nickel as an alloying element, making nickel coins was becoming an expensive proposition, with each coin costing as much as 7 cents to produce.

When designing a new coin, U.S. government agencies such as NIST use a "dummy" imprint so as not to run afoul of anti-counterfeiting rules. Here, Martha Washington in a mob cap subs in for Thomas Jefferson.

“This was really a story of working backwards,” says Carelyn Campbell, the leader of the group that worked on the nickel reformulation. Instead of starting with a material and designing a product around that material’s limitations, the researchers began with a list of needs from the Mint and designed a material to meet the product need. Everything they did also had to be workable using the existing machinery owned by the Treasury Department.

To make the new nickel, NIST used the currently available MGI infrastructure (which includes some experimental data and a variety of advanced, relatively new computational tools) to design a different class of coinage materials in just 18 months—significantly shorter than the three- to five-year time period usually required for such materials. The team then contributed data to the developing materials-innovation infrastructure, making it easier for anyone to design new coinage materials in the future.

“One of the big goals for the MGI is to make materials data more accessible and reusable,” says Campbell. “I believe ours are the first computationally designed coinage materials.”

Coins and nickel alloys are familiar, but the MGI is also designed to encourage the basic research needed for novel materials, such as graphene, which was discovered in 2004 using Scotch tape and a piece of the same humble stuff used for making pencil points: graphite.

Graphene is sometimes called the world’s first two-dimensional material. Although it is only one atom thick, it is incredibly strong and has a tremendously high melting point. It can also conduct electricity and is transparent. All these characteristics make the material seem incredibly promising for improving electronic devices, lighting, solar panels and batteries.

But before any large-scale application work can be done with this wonder stuff, basic information must be gathered. No one really knows the best ways to handle and transport graphene, and standards don’t really exist for its production. Also, it’s unclear how its properties might change after exposure to high temperatures.

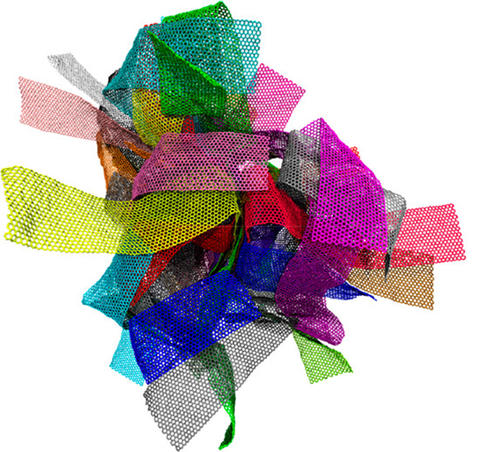

In January of 2018, a NIST research team led by Jack Douglas and funded through the MGI set out to answer a few of those questions in a paper written by Douglas and Wenjie Xia, which appeared in ACS Nano. By simulating a melt of graphene sheets, they discovered that, if heated above 1600 K (roughly 1327 °C or 2420 °F), the material would be transformed into a viscous liquid state, with the sheets themselves crumpling like paper, and that the graphene would become “foam-like” upon cooling into a glass state. Those qualities would make it an excellent lubrication material at high temperatures, and a possible filtration material at room temperature. The material may also prove to be a powerful fire suppressant.

This computer model shows what happens when you melt graphene sheets in a liquid and then freeze the liquid. The melted graphene sheets crumple like paper into a foam-like substance. This foamy material is potentially useful as filters and sensors (if you coat them) and insulators (since the holes don’t conduct much heat). Each sheet is colored differently to aid the visualization.

That information could prove extremely helpful to others around the world who are eager to use graphene in manufacturing. MGI is using artificial intelligence (AI) to help researchers discover applications that exploit the novel properties of these materials.

Although many people think of AI as the power behind robotic vacuums and other household items, artificial intelligence broadly refers to anything that machines are doing that was once accomplished only by humans. Machine learning, which is a subset of AI, is the process that seeks patterns in datasets and uses those patterns to predict or classify what is or is not important. The learning happens when the computational approach improves itself through trial and error.

That once seemed like science fiction, but machine learning has lately developed into a wide-ranging field of real-life study, as researchers have gained a better handle on the right ways to apply AI to real-world problems.

“When you are looking to design a material, you have a lot of options,” explains Begum Gulsoy, the associate director of the Center for Hierarchical Materials Design (CHiMaD), a NIST-sponsored, Chicago-based center of excellence for advanced materials research established in 2014. “It is sort of like trying to find a microscopic dot within a big box. What you are trying to do is make educated guesses which will bring you closer to the answer, so the search becomes much smaller. AI and machine learning can guide the design process such that it gets us closer to the points we’re searching for. Once we experiment we can actually work with much more accuracy than we would otherwise.”

CHiMaD brings together a wide range of industrial partners and advisers to focus on MGI goals, including companies working on structural engineering, semiconductors, software and IT services, chemical engineering and even candy making. The center is particularly interested in sharing expertise and teaching students about materials design and MGI principles that they can later use in industrial or academic settings. To date the center has worked with more than 300 undergraduates, graduates, and postdoctoral researchers and professionals, educating them about the value of exploring new materials and of sharing what they’ve learned with others in both the public and private sectors to advance the entire field of materials for the future.

“Many companies see value in being able to do materials design, which tells you something about the applicability of MGI to different concepts and industrial settings,” says Gulsoy. “It comes down to being able to pinpoint what exactly are your requirements and constraints. You are all, after all, designing a material whether you are trying to make the smoothest chocolate or the strongest metal alloy … the trail of thoughts is the same.”

But teaching computers to work with the data poses lots of challenges.

“There’s a plethora of machine learning methods available today,” says Bob Hanisch, a NIST research scientist who specializes in data storage and retrieval. “But there are lots of pitfalls. … Without context, the results of any sort of machine learning algorithm are just going to be garbage.”

Too little data can give you a false sense of confidence in outcomes. Without enough exemplars, some subtle differences might be missing, Hanisch says. “And that is often the case in materials science; there just is not that much existing data on the materials in question.”

To illustrate his point, Hanisch offers an infamous cautionary tale from biology. An algorithm was developed to differentiate dog pictures from wolf pictures with a high rate of accuracy. When humans went back and examined the photos, they realized that the wolf pics were all set in the snow, purely by coincidence. What the computer recognized was the setting, not anything really about the dogs or the wolves themselves, Hanisch explains. Both the quality of the data and the context were lacking, so the results looked robust but were faulty.
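For readers who want to see the trap in miniature, here is a toy sketch in Python. It is not the algorithm from the tale Hanisch describes. The photos, the two yes/no features ("snow" and "long_snout") and the simple pick-the-best-feature learner are all made up for illustration. The learner scores perfectly on its training photos by keying on snow, then gets every photo wrong once wolves appear on grass and dogs appear in snow.

```python
# Hypothetical toy data: each photo is reduced to two yes/no features.
# "snow" is the background; "long_snout" stands in for a real anatomical cue.
# Labels: 1 = wolf, 0 = dog. None of these values come from a real study.
TRAIN = [
    # (snow, long_snout, label)
    (1, 1, 1), (1, 1, 1), (1, 0, 1), (1, 1, 1),  # wolves, all photographed in snow
    (0, 0, 0), (0, 0, 0), (0, 1, 0), (0, 0, 0),  # dogs, none photographed in snow
]
TEST = [
    (0, 1, 1), (0, 1, 1),  # wolves on grass
    (1, 0, 0), (1, 0, 0),  # dogs in snow
]
FEATURES = {"snow": 0, "long_snout": 1}


def accuracy(rows, idx):
    """Fraction of rows where the chosen feature's value equals the label."""
    return sum(row[idx] == row[2] for row in rows) / len(rows)


def fit_stump(rows):
    """Pick the single feature that best matches the training labels."""
    return max(FEATURES, key=lambda name: accuracy(rows, FEATURES[name]))


chosen = fit_stump(TRAIN)
train_acc = accuracy(TRAIN, FEATURES[chosen])
test_acc = accuracy(TEST, FEATURES[chosen])
print(chosen, train_acc, test_acc)  # prints: snow 1.0 0.0
```

In this made-up data the anatomical cue is right only 75 percent of the time on the training photos, so the learner prefers snow, which is right 100 percent of the time there and 0 percent of the time on the new photos. Nothing in the training numbers alone reveals the problem; only context about what the features mean does.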

Similarly, images of the microstructures of materials can be labeled according to their properties and performance capabilities and then cataloged. After a while, a given machine learning system will begin to detect patterns. But that’s just a data-gathering exercise. Machine learning algorithms know nothing about the physical world unless we tell them about it, so finding contextual information to feed into the system is key. And for materials, the information can be much more complex than just “snow” or “no snow.”

For materials, that means many of the same things Jim Warren talked about with his steel samples: the list of ingredients, the manufacturing process, “that is, the soufflé recipe,” Warren says. It also means the things that Campbell’s and Douglas’ research groups discovered when working with nickel and graphene: quench time, crumpling dynamics, and stability at high temperatures.

Once data needs have been identified, materials scientists use models to narrow the knowledge gaps. The idea is to accelerate the rate of discovery by merging experiments and simulations.

Sharing all the discoveries is far more complex than just tossing information out into the World Wide Web for anyone to find accidentally. Researchers don’t always use the same terminology to describe identical topics, processes and material components.

To help, NIST has been developing a web-based materials resource registry, and publishers of data who want to submit their work must use a predefined vocabulary from a list that Hanisch and his team have developed.

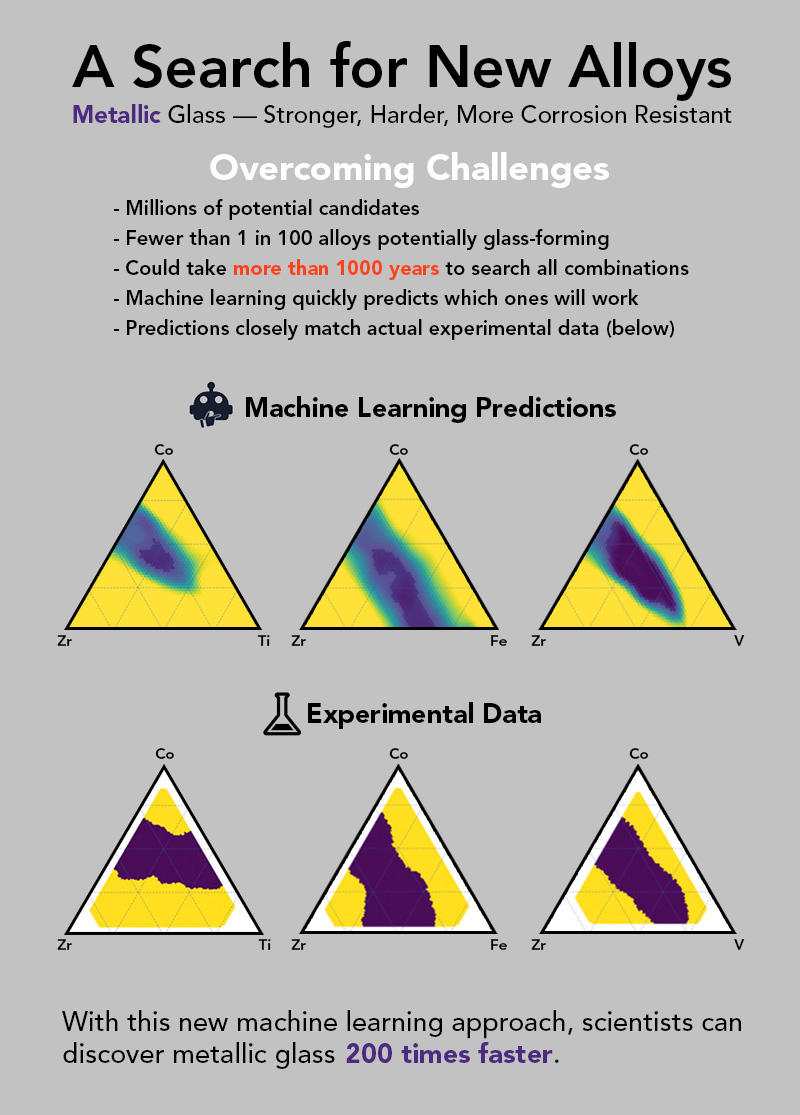

NIST scientists have also been collaborating with other research institutions and private companies to develop databases. This past year, for example, a team that included researchers from NIST, the Department of Energy’s SLAC National Accelerator Laboratory, Northwestern University and a Silicon Valley-based company, Citrine Informatics, announced it had been able to use machine learning techniques to find a shortcut for discovering and improving metallic glass at a fraction of the time and cost previously estimated for the material.

Although rarely discussed outside the worlds of applied physics and materials science, metallic glass has become a kind of holy grail for some research teams. Most metals have an ordered atomic structure, making them rigid. Glasses, whether metallic or the more familiar silicate materials that are fashioned into conventional glassware, are amorphous, with a more randomized molecular structure that resembles a liquid more than a solid. Unlike conventional glass, however, this novel material can conduct electricity, is extremely strong and may make excellent coatings for steel or other building materials.

To date, scientists have not identified a truly predictive model detailing why one mixture of elements will form a glass, while a different mixture of the same or similar elements will not. Over the last 50 years, scientists have identified and tabulated about 6,000 combinations of various materials in the quest to make the stuff, with only incremental amounts of progress. Using machine learning, the MGI-funded team was able to make and screen more than 20,000 new metals for their ability to form glasses in a single year, an accomplishment which was then detailed in a paper published in Science Advances in April 2018.

It was helpful to have a private company involved in the metallic glass project, says Warren, the MGI lead. “They own confidence in AI. They also have experience in industry and they know what industry needs.”

But having MGI exist as a public-sector venture is an important way to remove risk for companies that want to do new materials work. Just like building the internet, there’s a big return on government investment when the infrastructure is available to everyone who wants to use it.

“MGI is essentially a very similar kind of argument for infrastructure,” he says, noting that a 2018 report found that the initiative could deliver between $123 billion and $270 billion annually to the U.S. economy. “Once we’ve built it, all sorts of new business models will crop up. And that’s a good thing for growing the materials field.”