Summary

The human brain performs some types of information processing, such as speech recognition, image recognition, and video processing, much more efficiently than modern computers can. Neuromorphic computing is the field of making computing more efficient by taking inspiration from how the brain works. There is strong evidence that parts of the brain process information based on the timing of events, such as neural spikes. Standard integrated circuits can be adapted to perform time-based computing and thereby solve big-data processing and machine learning tasks efficiently. We are extending time-based computing in important ways by studying new device and memory technologies, exploring new mathematical frameworks, and building demonstrations of time-based computing architectures.

Description

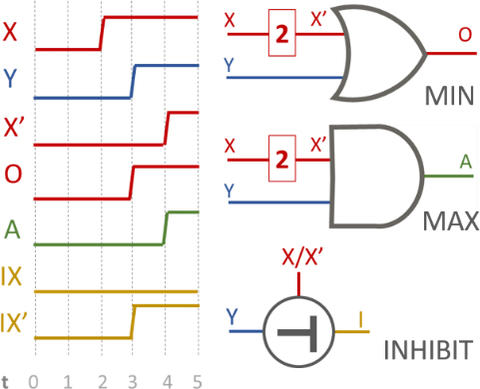

Fig. 1. Time re-envisioned: not a by-product of computation, but the domain in which information is encoded, leading to different mathematical functions becoming energy efficient primitives.

In standard integrated circuits, information coded as ones and zeros is represented by voltages on wires being high or low. The circuits consume energy during transitions between these voltages. A binary number uses one wire per bit, so many wires can switch each time the number changes. In “race logic,” information is encoded not in these bits but in the time at which a voltage changes from low to high. This allows more than one bit of information to be encoded on a single wire. Such an approach has lower precision than the usual binary representation but can operate faster, at much lower energy, and in a smaller area on the integrated circuit.
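A toy count makes this trade-off concrete. The sketch below is a simplified model of our own, not a circuit from this work: it tallies how many wires switch on a parallel binary bus as it steps through a few values, versus a single race-logic wire that rises once per value (at a time proportional to the value) and is then reset. It ignores clocking, delay-line and reset circuitry, and assumes a linear time code; all function names are hypothetical.

```python
from typing import Iterable, List


def binary_toggles(values: Iterable[int], start: int = 0) -> int:
    """Count wire transitions on a parallel binary bus as it steps through values."""
    total, prev = 0, start
    for v in values:
        total += bin(prev ^ v).count("1")  # each differing bit is one wire that switches
        prev = v
    return total


def race_toggles(values: Iterable[int]) -> int:
    """Count transitions on one race-logic wire: a rising edge per value
    (its arrival time carries the value) plus a falling edge to reset it."""
    return 2 * sum(1 for _ in values)


def race_arrival_times(values: Iterable[int], unit_delay: float = 1.0) -> List[float]:
    """Encode each value as the arrival time of its rising edge (assumed linear code)."""
    return [v * unit_delay for v in values]


def race_time_slots(bits: int) -> int:
    """Distinguishable arrival times one race wire needs to match an n-bit binary bus."""
    return 2 ** bits
```

For the sequence 7, 8, 15, 0 starting from 0, the 4-bit binary bus makes 14 transitions while the race wire makes 8. The price is precision and latency: matching an n-bit bus requires 2^n distinguishable arrival times, so `race_time_slots(4)` is 16.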

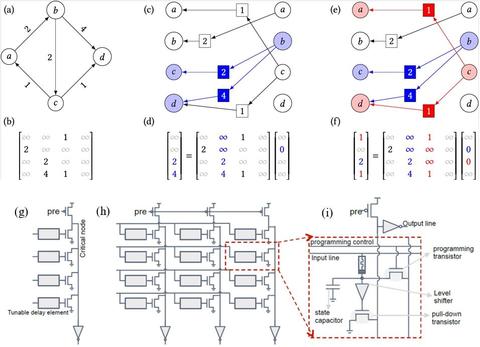

In race logic, information is encoded in wavefronts. A wavefront is a set of transitions across a group of wires, and the information lies in the relative arrival times of those transitions. The relative arrival times can be incremented or decremented with delay elements. Simple logic gates determine which transition arrives first or last: an OR gate rises as soon as its first input rises, while an AND gate rises only when its last input rises. As the wavefront propagates through the circuit, different parts move faster or slower depending on the information being processed. The final answer is determined by the signal that wins the race.
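The gate behavior described above can be modeled by treating each wire as the arrival time of its rising edge. In this minimal sketch (hypothetical function names, ideal gates with no intrinsic delay assumed), a delay element adds a constant, an OR gate takes the minimum, and an AND gate takes the maximum, which is the min-plus (tropical) algebra often used to describe race logic. A wire that never rises is represented by infinity.

```python
import math

NEVER = math.inf  # arrival time of a wire that never rises during the computation


def delay(t: float, d: float) -> float:
    """Delay element: the edge leaves d time units after it arrives."""
    return t + d


def or_gate(*arrivals: float) -> float:
    """OR gate: output rises with the FIRST input edge (minimum arrival time)."""
    return min(arrivals)


def and_gate(*arrivals: float) -> float:
    """AND gate: output rises with the LAST input edge (maximum arrival time)."""
    return max(arrivals)


# A wavefront on three wires: edges arrive at t = 2, 5 and 3.
a, b, c = 2.0, 5.0, 3.0
first = or_gate(delay(a, 4.0), b)  # min(6, 5) = 5: wire b wins this race
last = and_gate(a, delay(c, 1.0))  # max(2, 4) = 4: waits for the delayed c
```

`or_gate(NEVER, 3.0)` returns 3.0, while `and_gate(NEVER, 3.0)` returns infinity, mirroring an AND gate that never fires if one of its inputs never rises.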

The simplicity of the unit cells makes race logic especially powerful for problems that can be mapped to spatially regular data structures such as graphs, trees, and the convolutional layers of neural networks. We have studied all three. Decision-tree-based models are useful for making categorical decisions on image data, while convolutional layers are essential to many types of modern deep neural networks. When accelerated with race logic, these models achieve accuracy within about one percent of the state of the art at one to two orders of magnitude lower energy cost.
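As one illustration of mapping a graph onto race logic (a textbook example, not necessarily one of the designs studied here), each node can be an OR gate and each edge a delay element. When a rising edge is launched at a source node, every node fires at the earliest time any path reaches it, so its firing time equals its shortest-path distance from the source. The sketch below simulates this race in software with an event queue; the graph format and function name are assumptions for illustration, and edge delays must be non-negative, as physical delays are.

```python
import heapq
from typing import Dict, List, Tuple

Graph = Dict[str, List[Tuple[str, float]]]  # node -> [(successor, edge delay)]


def race_shortest_paths(graph: Graph, source: str) -> Dict[str, float]:
    """Simulate a wavefront launched at `source` at t = 0.

    Each node behaves like an OR gate: it fires on the first edge to arrive.
    Each graph edge behaves like a delay element. Returns each node's firing time.
    """
    fire_time: Dict[str, float] = {}
    events: List[Tuple[float, str]] = [(0.0, source)]
    while events:
        t, node = heapq.heappop(events)
        if node in fire_time:
            continue  # the OR gate is already high; later arrivals lose the race
        fire_time[node] = t
        for succ, d in graph.get(node, []):
            if succ not in fire_time:
                heapq.heappush(events, (t + d, succ))
    return fire_time


example: Graph = {
    "s": [("a", 2.0), ("b", 5.0)],
    "a": [("b", 1.0), ("t", 6.0)],
    "b": [("t", 2.0)],
}
times = race_shortest_paths(example, "s")  # {'s': 0.0, 'a': 2.0, 'b': 3.0, 't': 5.0}
```

Nodes the wavefront never reaches are absent from the result. In hardware, all edges race in parallel, so in this model the answer is available once the slowest shortest path has completed, rather than after a sequence of clocked update steps.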

Race logic works well not only with conventional integrated circuits but also in novel low-temperature computing environments based on superconductors. In these approaches, the physical encoding is naturally spike-based and hence lends itself well to temporal coding. While low-temperature computers are unlikely to be important in consumer electronics, they could play an important role in data centers because they can be much faster and more energy efficient than existing electronics. We are exploring the superconducting circuits that would be needed for such applications.

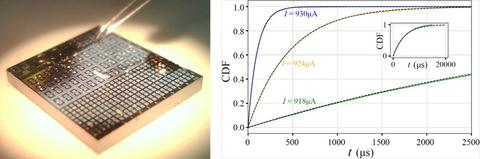

Making race logic useful for an even broader class of problems requires a way to generate stochastic signals in the temporal domain. Creating such signals requires interrogating a physical system that behaves stochastically in time. The superparamagnetic tunnel junction is one such device; it is known to exhibit exponentially distributed switching times. By using a temporally encoded measurement circuit and applying appropriately sized current pulses, we can sample from the exponential dwell-time distribution of these devices. Figure 3 shows a chip developed by our collaborators at SPINTEC, which contains superparamagnetic tunnel junctions of various diameters, together with the experimentally measured distributions of the timing signals they generate. This allows probabilistic computing operations to be performed in the time domain, increasing speed and energy efficiency.
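The statistics behind this can be sketched in software. The code below is a simplified model, not a description of the SPINTEC devices: it draws exponentially distributed dwell times with mean `tau`, which in a real junction would depend on factors such as device size and bias current but here is simply an assumed parameter. It then races several independent junctions against each other, keeping the first edge as an OR gate would. For exponential times, junction i wins with probability proportional to its rate 1/tau_i, so a first-arrival race becomes a way to sample from a categorical distribution. Function names are hypothetical.

```python
import random
from typing import List, Sequence


def sample_dwell_times(tau: float, n: int, rng: random.Random) -> List[float]:
    """Draw n exponentially distributed dwell times with mean tau (toy sMTJ model)."""
    return [rng.expovariate(1.0 / tau) for _ in range(n)]


def race_winner_frequencies(taus: Sequence[float], trials: int, seed: int = 0) -> List[float]:
    """Race independent junctions; the first edge wins, as at an OR gate.

    For exponential dwell times, junction i should win with probability
    (1 / tau_i) / sum_j (1 / tau_j). Returns the empirical win frequencies.
    """
    rng = random.Random(seed)
    wins = [0] * len(taus)
    for _ in range(trials):
        times = [rng.expovariate(1.0 / tau) for tau in taus]
        wins[times.index(min(times))] += 1  # index of the earliest edge
    return [w / trials for w in wins]
```

With `taus = [1.0, 3.0]` the rates are 1 and 1/3, so the first junction should win about 1 / (1 + 1/3) = 75% of races; the estimated frequencies approach [0.75, 0.25] as `trials` grows.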

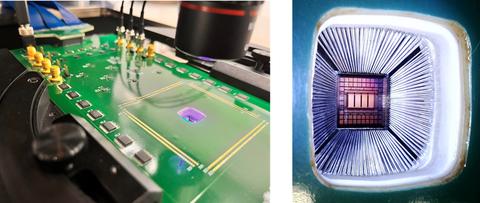

Race logic uses digital rising edges as a substitute for the spikes of biological systems, which makes it well suited to biologically inspired computing paradigms. We are currently experimenting with these techniques to perform inference operations in neural networks using our memory chip, as illustrated in Figure 4. By reading the states of the devices integrated into the chip in the temporal domain, we can improve performance by eliminating the need for analog-to-digital and digital-to-analog converters.
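One common way to read a device state in the temporal domain, assumed here for illustration and not necessarily the circuit used on our chip, is to precharge a capacitor and let it discharge through the device. The time at which the voltage crosses a threshold is set by the device resistance, and a comparator flagging that crossing produces an edge that can feed race logic directly, with no ADC in between. The sketch uses an ideal RC model, V(t) = V0 exp(-t / RC), which gives a crossing time t = RC ln(V0 / Vth); the function name and component values are hypothetical.

```python
import math


def time_to_threshold(resistance_ohm: float, capacitance_f: float,
                      v0: float = 1.0, v_th: float = 0.5) -> float:
    """Ideal RC discharge through the device: V(t) = v0 * exp(-t / (R * C)).

    Returns the time at which V(t) falls to v_th, i.e. R * C * ln(v0 / v_th).
    """
    if resistance_ohm <= 0 or capacitance_f <= 0:
        raise ValueError("resistance and capacitance must be positive")
    if not 0.0 < v_th < v0:
        raise ValueError("threshold must satisfy 0 < v_th < v0")
    return resistance_ohm * capacitance_f * math.log(v0 / v_th)


# Hypothetical values: a 100 fF read capacitor and two device states.
C_READ = 100e-15
t_low_r = time_to_threshold(10e3, C_READ)    # low-resistance state, about 0.69 ns
t_high_r = time_to_threshold(100e3, C_READ)  # high-resistance state, about 6.9 ns
```

In this model a tenfold difference in resistance becomes a tenfold difference in arrival time, so the stored state is already expressed as a temporal code.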

Other Publications

R. Gretsch, P. Song, A. Madhavan, J. Lau, T. Sherwood, "Energy Efficient Convolutions with Temporal Arithmetic," Proceedings of the 29th ACM International Conference on Architectural Support for Programming Languages and Operating Systems, Volume 2, pp. 354-368, doi: 10.1145/3620665.3640395.

G. Tzimpragos, J. Volk, D. Vasudevan, N. Tsiskaridze, G. Michelogiannakis, A. Madhavan, J. Shalf, T. Sherwood, "Temporal Computing With Superconductors," IEEE Micro, vol. 41, no. 3, pp. 71-79, May-June 2021, doi: 10.1109/MM.2021.3066377.

G. Tzimpragos, D. Vasudevan, N. Tsiskaridze, G. Michelogiannakis, A. Madhavan, J. Volk, J. Shalf, T. Sherwood, "A Computational Temporal Logic for Superconducting Accelerators," ASPLOS '20: Proceedings of the Twenty-Fifth International Conference on Architectural Support for Programming Languages and Operating Systems, pp. 435-448, March 2020, doi: 10.1145/3373376.3378517.