Strategic Vision

This summary provides an overview of the Strategic Vision document of the U.S. Artificial Intelligence Safety Institute (US AISI). Get the full text here.

EXECUTIVE SUMMARY

The Strategic Vision document describes US AISI’s philosophy, mission, and strategic goals. Its approach is rooted in two core principles: first, that beneficial AI depends on AI safety; and second, that AI safety depends on science. Guided by these principles, US AISI aims to address key challenges, including a lack of standardized metrics for frontier AI, underdeveloped testing and validation methods, and limited national and global coordination on AI safety issues.

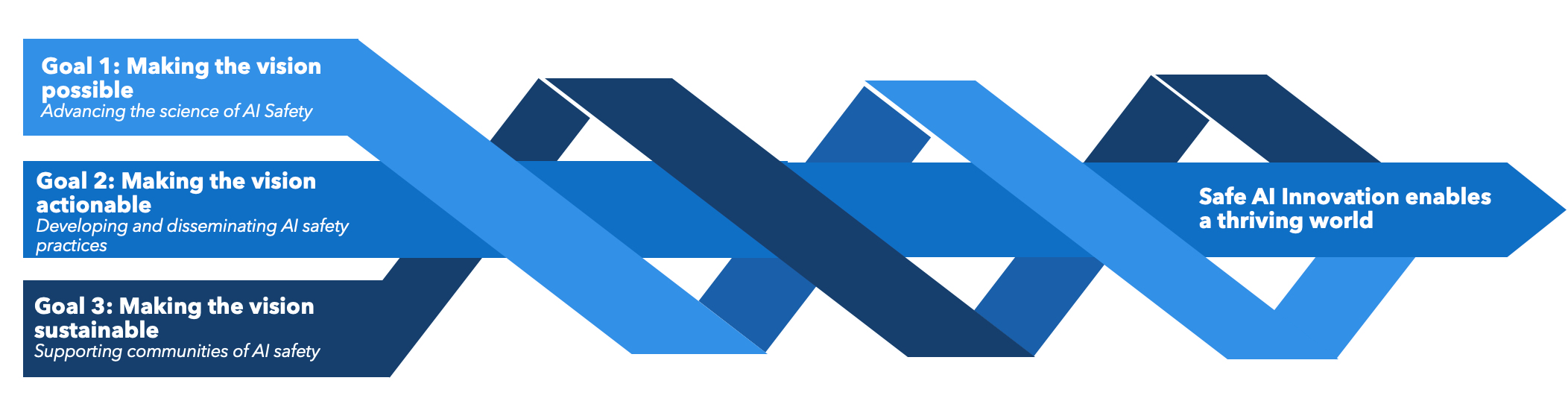

US AISI will focus on three key goals:

- Advance the science of AI safety;

- Articulate, demonstrate, and disseminate the practices of AI safety; and

- Support institutions, communities, and coordination around AI safety.

To achieve these goals, US AISI plans to conduct testing of advanced models and systems to assess potential and emerging risks; develop guidelines on topics including evaluations and risk mitigations; and perform and coordinate technical research, among other activities. US AISI will work closely with the AI industry, civil society members, and international partners to achieve these objectives.