Time and Frequency from A to Z, Ti

The designation of an instant on a selected time scale, used in the sense of time of day; or the interval between two events or the duration of an event, used in the sense of time interval.

An oscillator found inside an electronic instrument that serves as a reference for all of the time and frequency functions performed by that instrument. The time base oscillator in most instruments is a quartz oscillator, often an OCXO. However, some instruments now use rubidium oscillators as their time base.

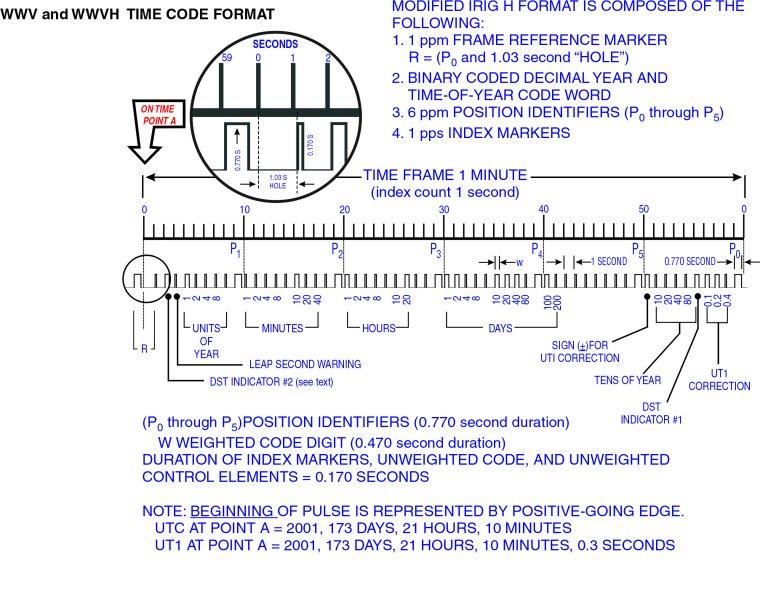

A code (usually digital) that contains enough information to synchronize a clock to the correct time of day. Most time codes contain the UTC hour, minute, and second; the month, day, and year; and advance warning of daylight saving time and leap seconds. NIST time codes can be obtained from WWV, WWVH, WWVB, ACTS, and the Internet Time Service. Other systems, such as GPS, have their own unique time codes. The format of the WWV/WWVH time code is shown in the graphic.

A statistic used to estimate time stability, based on the Modified Allan deviation. The time deviation is particularly useful for analyzing time transfer data, such as the results of a GPS common-view measurement.
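The following is a minimal sketch of how the time deviation can be computed from evenly spaced phase (time difference) readings `x`, in seconds. It uses the standard estimator that follows from the relation TDEV(τ) = (τ/√3)·MDEV(τ), with τ = nτ0, which lets TDEV be computed directly from second differences of the phase data. The function name `tdev` and the averaging-factor parameter `n` are illustrative. This is a plain, unoptimized loop, not any particular library's implementation.

```python
import math


def tdev(x: list[float], n: int) -> float:
    """Time deviation (seconds) at averaging time n * tau0.

    x: phase readings in seconds, taken at a fixed interval tau0.
    n: averaging factor (number of samples per averaging interval).
    """
    N = len(x)
    m = N - 3 * n + 1  # number of terms in the outer sum
    if n < 1 or m < 1:
        raise ValueError("need n >= 1 and at least 3n phase samples")
    total = 0.0
    for j in range(m):
        # Sum of n consecutive second differences of the phase data.
        inner = sum(x[i + 2 * n] - 2 * x[i + n] + x[i] for i in range(j, j + n))
        total += inner * inner
    return math.sqrt(total / (6.0 * n * n * m))
```

A constant frequency offset produces a linear phase ramp, whose second differences are zero, so it does not contribute to TDEV; only the noise around the ramp does.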

The measurement domain where voltage and power are measured as functions of time. Instruments such as oscilloscopes and time interval counters are often used to analyze signals in the time domain.

The elapsed time between two events. In time and frequency metrology, time interval is usually measured in small fractions of a second, such as milliseconds, microseconds, or nanoseconds. Coarse time interval measurements can be made with a stopwatch. Higher-resolution time interval measurements are often made with a time interval counter.

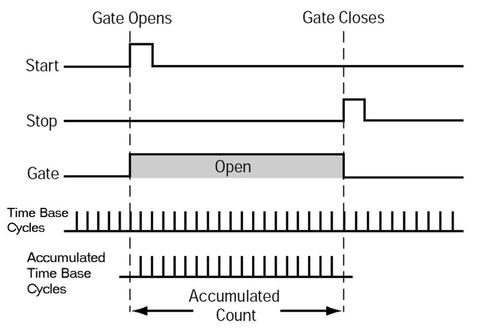

An instrument used to measure the time interval between two signals. A time interval counter (TIC) has inputs for two electrical signals. One signal starts the counter and the other signal stops it. All TICs contain three basic parts: the time base, the gate, and the counting assembly. The time base provides evenly spaced pulses used to measure time interval. The gate controls when the time interval count begins and ends. Pulses passing through the gate are routed to the counting assembly. The counter can then be reset (or armed) to begin another measurement. The start and stop inputs are usually provided with controls that determine the trigger level at which the counter responds to input signals. The TIC begins measuring time interval when the start signal reaches its trigger level and stops measuring when the stop signal reaches its trigger level. The time interval is measured by counting cycles from the time base, as shown in the illustration.
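To make the counting process concrete, here is a hypothetical model of the simple TIC described above, not a description of any real instrument. It assumes time base edges fall at whole multiples of the period and that the gate passes every edge after the start event up to and including the stop event. Times are integer picoseconds so the arithmetic is exact; the function name `simple_tic_count` is illustrative.

```python
def simple_tic_count(start_ps: int, stop_ps: int, timebase_hz: int) -> tuple[int, int]:
    """Model a simple TIC that counts whole time base cycles.

    Returns (cycles counted, measured interval in picoseconds).
    """
    if stop_ps < start_ps:
        raise ValueError("stop must not precede start")
    if 10**12 % timebase_hz:
        raise ValueError("this sketch assumes the period is a whole number of ps")
    period_ps = 10**12 // timebase_hz
    # Number of time base edges in the half-open window (start, stop].
    cycles = stop_ps // period_ps - start_ps // period_ps
    return cycles, cycles * period_ps


# A 1230 ns interval measured with a 10 MHz (100 ns period) time base.
cycles, measured_ps = simple_tic_count(120_000, 1_350_000, 10_000_000)
print(cycles, measured_ps)
```

With a 10 MHz time base the model counts 12 cycles and reports 1200 ns for a true interval of 1230 ns: the reading can be off by up to one time base period, depending on where the start and stop events fall relative to the time base edges.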

The most important specification of a TIC is resolution. In simple TIC designs, such as the one in the illustration, the resolution is limited to the period of the TIC's time base. For example, a TIC with a 10 MHz time base would be limited to a resolution of 100 ns, because the simplest TIC designs count only whole time base cycles to measure time interval. To improve on this, some TIC designers have multiplied the time base frequency to get more cycles and thus more resolution. For example, multiplying the time base frequency to 100 MHz makes 10 ns resolution possible, and 1 ns counters have even been built using a 1 GHz time base. However, modern counters increase resolution by detecting fractions of a time base cycle through interpolation or digital signal processing. These methods have made 1 ns TICs commonplace, and even 1 picosecond TICs are available.
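For a counter that counts only whole cycles, the resolution is simply one time base period, 1/f. This short sketch (the helper name `resolution_ns` is illustrative) reproduces the three figures given above.

```python
def resolution_ns(timebase_hz: float) -> float:
    """Resolution (ns) of a TIC that counts only whole time base cycles: one period."""
    return 1e9 / timebase_hz


for f_hz in (10e6, 100e6, 1e9):
    print(f"{f_hz / 1e6:g} MHz time base -> {resolution_ns(f_hz):g} ns resolution")
```

Interpolating counters break this 1/f limit, so this relation applies only to the simple design.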

TICs are also commonly used to measure frequency in the time domain. However, when a TIC is used to measure frequency, it is not practical to work directly with standard frequency signals, such as 10 MHz. Instead, low-frequency input signals must be used to start and stop the counter. As shown in the diagram, the solution is to use a frequency divider to convert standard frequency signals to a lower frequency, usually 1 Hz. The use of low-frequency signals reduces the problem of counter overflows and underflows (cycle ambiguity) and helps prevent errors that can occur when the start and stop signals are too close together.
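Once both signals are divided down to 1 Hz (a divide-by-10,000,000 step for 10 MHz inputs), a series of TIC readings can be turned into a fractional frequency offset from the slope of the readings over time. The sketch below assumes a hypothetical setup: the TIC is started by the reference pulse and stopped by the device-under-test (DUT) pulse, so each reading is x = t_dut − t_ref, and the readings never wrap past a full second. A fast DUT makes x shrink, so the offset is the negative of the slope. The least-squares fit and the name `frequency_offset` are illustrative choices, not a prescribed NIST method.

```python
def frequency_offset(elapsed_s: list[float], x_s: list[float]) -> float:
    """Fractional frequency offset of the DUT from 1 Hz time interval readings.

    elapsed_s: time (s) at which each reading was taken.
    x_s: TIC readings (s), started by the reference, stopped by the DUT.
    Returns -slope of x versus time, fitted by ordinary least squares.
    """
    n = len(elapsed_s)
    if n != len(x_s) or n < 2:
        raise ValueError("need at least two paired readings")
    t_mean = sum(elapsed_s) / n
    x_mean = sum(x_s) / n
    num = sum((t - t_mean) * (x - x_mean) for t, x in zip(elapsed_s, x_s))
    den = sum((t - t_mean) ** 2 for t in elapsed_s)
    if den == 0:
        raise ValueError("readings must span a nonzero time")
    return -num / den


# DUT pulse arrives 1 ns earlier every 1000 s: offset of about +1e-12.
times = [0.0, 250.0, 500.0, 750.0, 1000.0]
readings = [0.5e-6 - 1e-12 * t for t in times]
print(frequency_offset(times, readings))
```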

The information displayed by a clock or calendar, usually including the hour, minute, second, month, day, and year. Time codes derived from a reference source such as UTC(NIST) are often used to synchronize clocks to the correct time of day.

The difference between a measured on-time pulse or signal and a reference on-time pulse or signal, such as UTC(NIST). Time offset measurements are usually made with a time interval counter. The measurement result is usually reported in fractions of a second, such as milliseconds, microseconds, or nanoseconds.

An Internet time code protocol defined by RFC 868 and supported by the NIST Internet Time Service. The time code is sent as a 32-bit unformatted binary number that represents the time in UTC seconds since midnight on January 1, 1900. The server listens for Time Protocol requests on port 37 and responds over either TCP or UDP. Conversion from UTC to local time (if necessary) is the responsibility of the client program. The 32-bit binary format can represent times over a span of about 136 years with a resolution of 1 second, so the count rolls over in 2036. There is no provision for increasing the resolution or increasing the range of years.
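The sketch below decodes a Time Protocol reply on the client side: the 4 bytes are read as a big-endian unsigned 32-bit count of seconds and added to the 1900 epoch. It also includes a minimal TCP client, which relies on the RFC 868 behavior that the server sends the 4 bytes as soon as the connection opens and then closes it. The function names are illustrative, and the host you pass in is up to you; check NIST's current server list before relying on any particular host or on continued port 37 support.

```python
import datetime
import socket
import struct

# Epoch of the RFC 868 count: midnight UTC, January 1, 1900.
RFC868_EPOCH = datetime.datetime(1900, 1, 1, tzinfo=datetime.timezone.utc)


def decode_time_protocol(data: bytes) -> datetime.datetime:
    """Convert a 4-byte RFC 868 reply to a timezone-aware UTC datetime."""
    if len(data) != 4:
        raise ValueError("an RFC 868 reply is exactly 4 bytes")
    (seconds,) = struct.unpack("!I", data)  # big-endian unsigned 32-bit
    return RFC868_EPOCH + datetime.timedelta(seconds=seconds)


def query_time_server(host: str, port: int = 37, timeout: float = 5.0) -> datetime.datetime:
    """Open a TCP connection, read the 4-byte reply, and decode it."""
    data = b""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        while len(data) < 4:
            chunk = sock.recv(4 - len(data))
            if not chunk:
                raise ConnectionError("server closed the connection early")
            data += chunk
    return decode_time_protocol(data)
```

This decoder does not handle the 2036 rollover; a client that must work past that date would need an outside hint (for example, a rough local clock) to pick the correct 136-year era.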

An agreed-upon system for keeping time. All time scales use a frequency source to define the length of the second, which is the standard unit of time interval. Seconds are then counted to measure longer units of time interval, such as minutes, hours, and days. Modern time scales such as UTC define the second based on an atomic property of the cesium atom, and thus standard seconds are produced by cesium oscillators. Earlier time scales (including earlier versions of Universal Time) were based on astronomical observations that measured the frequency of the Earth's rotation.

A device that produces an on-time pulse that is used as a reference for time interval measurements, or a device that produces a time code used as a time-of-day reference.

A measurement technique used to send a reference time or frequency from a source to a remote location. Time transfer involves the transmission of an on-time marker or a time code. The most common time transfer techniques are one-way, common-view, and two-way time transfer.
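As an illustration of the arithmetic behind two of these techniques, the sketch below computes the difference between clocks A and B. For common-view, each station is assumed to have already measured its own clock against the same satellite signal and corrected for its own path delay, so the satellite clock cancels in the difference. For two-way, each station is assumed to start a TIC with its own pulse and stop it with the pulse received from the other station; if the path delays in the two directions are equal (an assumption that real systems must calibrate), the delay cancels. Function names and the use of nanoseconds are illustrative.

```python
def common_view_difference(a_minus_source_ns: float, b_minus_source_ns: float) -> float:
    """Clock A minus clock B from simultaneous measurements of one common source.

    Each argument is (local clock - received source time), already corrected
    for that station's path delay. The source clock error cancels.
    """
    return a_minus_source_ns - b_minus_source_ns


def two_way_difference(ti_at_a_ns: float, ti_at_b_ns: float) -> float:
    """Clock A minus clock B from two-way time transfer readings.

    ti_at_a_ns: TIC at A, started by A's pulse, stopped by B's received pulse.
    ti_at_b_ns: TIC at B, started by B's pulse, stopped by A's received pulse.
    Assumes the A-to-B and B-to-A path delays are equal, so they cancel.
    """
    return (ti_at_a_ns - ti_at_b_ns) / 2
```

With clock A 250 ns ahead of true time, clock B 100 ns ahead, and a common one-way delay d, station A reads 150 + d and station B reads −150 + d, so half their difference is 150 ns regardless of d.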

A geographical region that maintains a local time that usually differs by an integral number of hours from UTC. Time zones were initially instituted by the railroads in the United States and Canada during the 1880s to standardize timekeeping. Within several years the use of time zones had expanded internationally.

Ideally, the world would be divided into 24 time zones of equal width. Each zone would have an east-west dimension of 15° of longitude centered upon a central meridian. This central meridian for a zone is defined in terms of its position relative to a universal reference, the prime meridian (often called the zero meridian) located at 0° longitude. In other words, the central meridian of each zone has a longitude divisible by 15°. When the sun is directly above this central meridian, local time at all points within that time zone would be noon. In practice, the boundaries between time zones are often modified to accommodate political boundaries in the various countries. A few countries use a local time that differs by one half hour from that of the central meridian.
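In the idealized scheme just described, the zone containing a given longitude follows directly from the 15° rule: the zone whose central meridian is at 15k degrees covers 15k ± 7.5 degrees and has a nominal offset of k hours. The sketch below (hypothetical helper, east longitudes positive) computes that nominal offset. It deliberately ignores political boundaries, half-hour zones, and daylight saving time, so it will not match the legal time everywhere.

```python
import math


def nominal_utc_offset_hours(longitude_deg: float) -> int:
    """Nominal UTC offset (hours) of the idealized 15-degree zone for a longitude.

    East longitudes are positive. The zone centered on 15*k degrees spans
    15*k - 7.5 up to (but not including) 15*k + 7.5 degrees.
    """
    if not -180.0 <= longitude_deg <= 180.0:
        raise ValueError("longitude must be between -180 and 180 degrees")
    return math.floor(longitude_deg / 15.0 + 0.5)


for place, lon in [("Greenwich", 0.0), ("New York", -74.0), ("Denver", -105.0)]:
    k = nominal_utc_offset_hours(lon)
    print(f"{place}: zone {k:+d} h, central meridian {15 * k} degrees")
```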

Converting UTC to local time, or vice versa, requires knowing the number of time zones between the prime meridian and your local time zone. It is also necessary to know whether Daylight Saving Time (DST) is in effect, since UTC does not observe DST. The table shows the difference between UTC and local time for the major United States time zones.

| Time Zone | Difference from UTC During Standard Time | Difference from UTC During Daylight Time |
|---|---|---|
| Pacific | -8 hours | -7 hours |
| Mountain | -7 hours | -6 hours |
| Central | -6 hours | -5 hours |
| Eastern | -5 hours | -4 hours |
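The conversion described above amounts to adding a fixed offset chosen by zone and by whether DST is in effect. The sketch below hard-codes the offsets from the table and leaves the DST decision to the caller, which is an assumption of this example; production code would normally rely on the IANA time zone database (in Python, the standard `zoneinfo` module) instead of fixed offsets. The dictionary and function names are illustrative.

```python
from datetime import datetime, timedelta, timezone

# (standard-time offset, daylight-time offset) in hours, from the table.
US_ZONE_OFFSETS = {
    "Pacific": (-8, -7),
    "Mountain": (-7, -6),
    "Central": (-6, -5),
    "Eastern": (-5, -4),
}


def _offset(zone: str, dst_in_effect: bool) -> timedelta:
    standard, daylight = US_ZONE_OFFSETS[zone]
    return timedelta(hours=daylight if dst_in_effect else standard)


def utc_to_local(utc_time: datetime, zone: str, dst_in_effect: bool) -> datetime:
    """Convert an aware UTC datetime to local time in one of the listed zones."""
    if utc_time.tzinfo is None:
        raise ValueError("utc_time must be timezone-aware")
    return utc_time.astimezone(timezone(_offset(zone, dst_in_effect)))


def local_to_utc(local_time: datetime, zone: str, dst_in_effect: bool) -> datetime:
    """Convert a naive local wall-clock time in a listed zone to aware UTC."""
    return (local_time - _offset(zone, dst_in_effect)).replace(tzinfo=timezone.utc)


noon_utc = datetime(2024, 1, 15, 17, 0, tzinfo=timezone.utc)
print(utc_to_local(noon_utc, "Eastern", dst_in_effect=False))
```

For 17:00 UTC in January, Eastern Standard Time (UTC − 5 hours) gives 12:00 local time.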