A Brief History of Atomic Time

Since the first societies, humans have needed to keep track of time. Ancient farmers needed to know when to plant and harvest crops. People developed calendars to keep track of religious rituals. As human societies became more technological and complex, so did the demands for accurate timekeeping: workdays and markets needed to be coordinated; train schedules needed to be synchronized. No wonder time has long obsessed scientists, philosophers and inventors.

In practical terms, keeping time requires just two things: something that oscillates with a steady beat and something that counts the beats and displays the time. Ancient Egyptians invented the world’s first known timepieces, using the regular movement of the Sun’s shadows to track the course of the day. Keeping time at night was more challenging.

From the Renaissance onward, clockmakers developed ever more sophisticated clocks based on mechanical devices like springs and pendulums. Nearly a century ago, the quartz oscillator was invented, heralding the age of electronic clocks.

But as the seconds ticked by, these clocks inevitably ran fast or slow. And no two mechanical or electrical clocks were identical, meaning their ticks of time were inevitably a bit off from one another. As nations stitched themselves together into a global economy and community, they needed a single, consistent, unambiguous and accurate way to measure and share time. Out of that need, a new kind of clock was born.

A Bold Idea

The atomic clock was a dream long before it was a reality. The dream started in the 1870s, when famed British scientists James Clerk Maxwell and William Thomson (better known as Lord Kelvin) proposed a bold and radical new idea.

Physicists had recently discovered that atoms — the microscopic building blocks of matter — absorb and emit light waves of specific frequencies. (A light wave’s frequency is the number of wave peaks that pass a particular spot in one second as the wave travels through space.) Atoms of a specific element, Maxwell and Thomson reasoned, are identical to one another and never change, so the frequencies of light they absorb and emit shouldn’t either. Atoms could, in theory, be used to make perfect clocks.

Thomson and a colleague described the idea in a paper in 1879, writing:

The recent discoveries due to the Kinetic theory of gases and to Spectrum analysis (especially when it is applied to the light of the heavenly bodies) indicate to us natural standard pieces of matter such as atoms of hydrogen or sodium, ready made in infinite numbers, all absolutely alike in every physical property. The time of vibration of a sodium particle corresponding to any one of its modes of vibration is known to be absolutely independent of its position in the universe, and it will probably remain the same so long as the particle itself exists.

The atomic clock was a farsighted notion — one that, in some ways, predicted the quantum theory that wouldn’t be fully fleshed out for another half-century. And the actual technology needed to control atoms and turn them into timekeepers wouldn’t be invented until decades after that.

Maxwell and Thomson had peered into the future of time.

The Atomic Clock Emerges

The atomic clock truly began to take shape in the late 1930s and early 1940s, as the world careened toward war. In 1939, Columbia University physicist Isidor Isaac Rabi suggested that scientists at the National Bureau of Standards (NBS), which later became the National Institute of Standards and Technology (NIST), use his team’s newly developed molecular beam magnetic resonance technique as a time standard. At the time, the U.S. was relying for its time standard on quartz oscillators, which were neither accurate nor stable sources of time.

In 1940, Rabi took an important step toward the atomic clock. He measured the frequency at which cesium atoms naturally absorb and emit microwaves — what’s known as the cesium “resonant frequency” — to be around 9.1914 billion cycles per second. Rabi was awarded the 1944 Nobel Prize in Physics for his innovations. World War II temporarily diverted Rabi’s and many other physicists’ efforts away from clocks. But it also catalyzed radar technology that gave scientists powerful new ways to generate and measure microwave radiation — capabilities that would prove crucial to this new timekeeper.

In January 1945, as the war neared its end, Rabi spoke at a meeting of the American Physical Society and American Association of Physics Teachers about the promise of atomic timekeeping. New York Times science writer William Laurence covered the talk, evocatively describing Rabi’s proposed clock as a “cosmic pendulum” that would tap into “radio frequencies in the hearts of atoms.”

Two years later, in 1947, 35-year-old NBS physicist Harold Lyons picked up the baton. Rather than adopt Rabi’s suggestion of cesium, however, Lyons chose to measure the resonance of ammonia, a molecule with three hydrogen atoms bonded to one nitrogen.

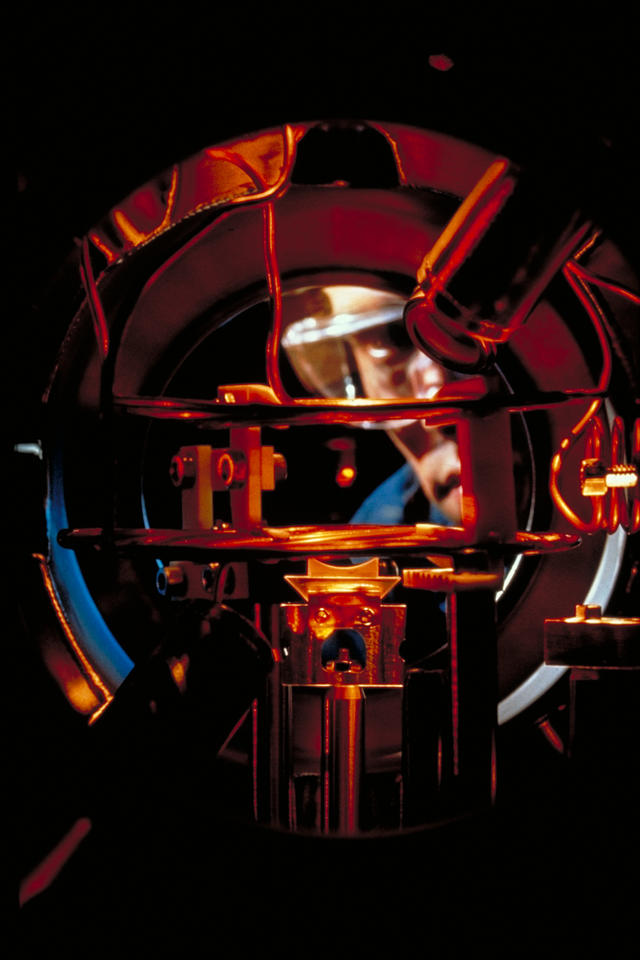

On Aug. 12, 1948, in the NBS laboratory in northwest Washington, D.C., Lyons and his colleagues trapped a cloud of ammonia molecules in a 9-meter-long copper cell and immersed them in microwaves. As the researchers tried different microwave frequencies, they hit upon one that caused the molecules to absorb and re-emit a maximum amount of radiation.

The team then counted the radiation’s wave peaks and manually transferred the count to a quartz crystal. The process resembled how you might tune a guitar: You hear a reference pitch and tighten or loosen a string until it plays that pitch. Lyons and his colleagues were able to define the second as the time it took ammonia molecules to emit roughly 23.9 billion cycles of microwave radiation.

Lyons unveiled his clock publicly in early 1949. He attached to it a traditional clock with a face, so the world would know that the strange, refrigerator-sized cabinet of dials and readouts was, in fact, a clock.

Sadly, the ammonia clock ultimately proved no more accurate or precise than existing ones. And Lyons himself did not seem to fully appreciate its potential, describing it as a research tool rather than a technology for broader society. In 1950, he wrote, “It is doubtful that atomic time would be used for civil purposes, since man’s activities will always be controlled by the rising and setting of the sun and the seasons.” Lyons also believed navigators would continue to rely on the stars. On both counts, he would be proved mostly wrong.

Regardless, Lyons’ invention did something crucial: It proved that Maxwell’s and Thomson’s dream of atomic timekeeping was no mere physicist’s flight of fancy. The long-awaited atomic clock was finally real.

A Special Atom

Lyons’ clock also made clear that the ammonia molecule would not be the future of timekeeping. His team soon abandoned ammonia for Rabi’s recommended element: the soft, gold-hued metal known as cesium. A large and heavy atom, cesium is relatively slow and easy to control. Its internal energy levels are easy to manipulate with microwaves and resist being disturbed by external magnetic fields.

But budget cuts and a relocation of the NBS clock group to Boulder, Colorado, stalled the American effort. Louis Essen, a physicist at the National Physical Laboratory (NPL) in the U.K. who had visited and learned from the American clock labs, eagerly filled the void. Essen developed a clock that used a design invented in 1949 by Harvard physicist Norman Ramsey, who won a Nobel Prize for it 40 years later.

Essen’s device sent a beam of hot cesium atoms through two successive microwave fields separated by nearly 50 centimeters. Unveiled in May 1955, the timepiece was the first atomic clock stable enough to be used as a time standard. Essen immediately grasped the full implications of what he had created.

“We invited the director” of NPL, Essen later wrote, “to come and witness the death of the astronomical second and the birth of atomic time.”

Essen moved quickly to establish the primacy of the atomic second. He struck up a collaboration with William Markowitz, director of the time services department at the U.S. Naval Observatory in Washington, D.C., just down the road from NBS. Markowitz calculated the duration of a 1950s-era astronomical second based on measurements of the Moon’s motion. Essen and an NPL colleague then counted the cycles of radiation absorbed and emitted by cesium atoms during one of those seconds.

The resonant frequency that Essen and Markowitz published in 1958 — 9,192,631,770 cycles per second, not far from Rabi’s 1940 measurement — had an uncertainty of around 20 cycles per second (roughly 2 parts per billion), far below that of the astronomical measurement. It was clear that the world’s time would soon be coming not from astronomers monitoring stars and planets, but from physicists manipulating atoms.

Atomic scientists in other countries, meanwhile, quickly caught up to their British peers. By 1959, NBS had built two high-performance cesium atomic beam clocks that could be precisely compared. NBS-1 became the U.S.’s official frequency standard in 1959. It was replaced by NBS-2 in 1960. Metrology institutes — national laboratories dedicated to measurement science — in France, Germany, Canada, Japan and Switzerland also built cesium clocks.

NBS-1 and NBS-2 had accuracies of 10 parts per trillion or better. To put that in more familiar terms, neither clock would have gained or lost even one second had it run continuously for 3,000 years.

By 1966, their successor NBS-3 had achieved an accuracy 10 times better and wouldn’t have gained or lost a second in 30,000 years. In less than two decades, the atomic clock had far outstripped every timepiece that had come before.
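The years-per-second figures above follow from simple arithmetic. As a rough sketch (the function name and the 365.25-day year are illustrative choices, not NIST's own method), a clock running with a constant fractional frequency error needs one second divided by that error to drift by a full second:

```python
# Convert a clock's fractional accuracy into "years until it is off by one second".
# Assumption: the error is constant and always pushes in the same direction,
# which is the worst case for accumulated drift.

SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # Julian year, about 3.156e7 seconds


def years_to_drift_one_second(fractional_error: float) -> float:
    """Years of continuous running before the accumulated error reaches 1 s."""
    if fractional_error <= 0:
        raise ValueError("fractional_error must be positive")
    seconds_needed = 1.0 / fractional_error
    return seconds_needed / SECONDS_PER_YEAR


# Accuracies quoted in the text: 10 parts per trillion, then 10 times better.
for label, error in [("NBS-1 / NBS-2", 10e-12), ("NBS-3", 1e-12)]:
    print(f"{label}: about {years_to_drift_one_second(error):,.0f} years per second of drift")
```

The results come out near 3,200 and 32,000 years, which the article rounds to 3,000 and 30,000.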

Private industry got in on the act, too. In the fall of 1956, the National Company, based in Malden, Massachusetts, came out with the cleverly named “Atomichron”: a commercial cesium beam clock based on the design of an MIT physicist named Jerrold Zacharias. The first units, each of which weighed 350 pounds and towered more than 2 meters tall, were sold to the U.S. Naval Research Laboratory for $50,000.

In the end, the expensive and unwieldy Atomichron was a commercial flop. But in 1964, Hewlett-Packard came out with a compact cesium beam clock that proved far more successful. An updated design is still sold today by Microchip; NIST and many other metrology institutes use these clocks in the ensembles that produce their time scales. (A time scale is an agreed-upon system for keeping time using data from clocks around the world.)

A new kind of atomic clock based on the element rubidium — one row up from cesium in the periodic table — came out a few years after the cesium clock. While never as accurate as cesium clocks, rubidium clocks could be made much smaller and cheaper, making them popular for applications where space or cost is at a premium.

A New Second

With the advent of super-accurate atomic clocks, the second — the fundamental unit of time — was ripe for a makeover. Until then, the second had been defined in terms of either the length of the day or the length of a year. Neither was ideal: The day length changes unpredictably as Earth’s rotation slows and speeds up, whereas the year, while more stable, is awkward and hard to measure.

Choosing which element to yoke the second to, however, was fraught. In 1960, Ramsey had developed a hydrogen-based clock in his lab at Harvard. For a few years, it seemed Ramsey’s hydrogen maser (the “maser” is essentially the microwave equivalent of the laser) would dethrone the cesium beam clock as the world’s most accurate timepiece.

But the maser had a weakness: The ultralight hydrogen atoms inevitably collided with the chamber walls, causing their apparent resonant frequencies to shift and ultimately limiting the device’s accuracy. In the end, cesium won the day — or perhaps we should say, cesium won the second.

In 1967, the General Conference on Weights and Measures — the body that oversees the International System of Units (SI) — redefined the second according to Essen’s atomic measurement. The second officially became the duration of 9,192,631,770 cycles of microwave radiation corresponding to what is known as the “hyperfine energy transition” in the isotope of cesium known as cesium-133.
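In other words, a cesium clock tells time by counting. A minimal sketch of that bookkeeping, with hypothetical function names chosen only for illustration, might look like this:

```python
from fractions import Fraction

# Cycles of cesium-133 hyperfine radiation in one SI second (1967 definition).
CESIUM_HZ = 9_192_631_770


def seconds_from_cycles(cycles: int) -> Fraction:
    """Elapsed time, in seconds, for a given count of cesium cycles (exact)."""
    return Fraction(cycles, CESIUM_HZ)


def cycles_in(seconds: int) -> int:
    """Number of cesium cycles a counter should register in `seconds` seconds."""
    return seconds * CESIUM_HZ


print(seconds_from_cycles(CESIUM_HZ))      # one second's worth of cycles
print(f"{cycles_in(86_400):,} cycles in a day")
```

Exact fractions are used here so that the count-to-time conversion carries no rounding error of its own; a real clock's electronics do this division in hardware.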

While most people noticed no immediate changes, the redefinition was nevertheless a profound moment for humanity. For the first time, the fundamental unit of time was tied not to stars in the heavens but to atoms on Earth.

The Atomic (Clock) Age

There remained a lot to be done. The new cesium clocks, while better than any that had come before, were still far from perfect. Scientists continued to root out and minimize sources of error such as temperature variations and unwanted energy transitions. By 1975, NIST’s NBS-6 atomic clock was accurate and stable enough to neither gain nor lose a second in 400,000 years.

Launched nearly two decades later, in 1993, NIST’s NIST-7 atomic clock was significantly more accurate and wouldn’t have gained or lost a second in 6 million years.

You don’t need this kind of precision for your wristwatch. But being able to measure such tiny fractions of a second opened up technological applications that would have been unimaginable just decades earlier. Atomic clocks spurred advances in timekeeping, communication, metrology, and advanced positioning and navigation systems.

Most famously, atomic clocks enabled satellite-based positioning, which, despite Lyons’ skepticism, has replaced the stars as the world’s navigation system of choice. The U.S. Navy started sending experimental atomic clocks into space in 1974. In 1977, it launched the GPS satellite network. Each satellite had on board both cesium- and rubidium-based clocks. Those clocks were steered by even more accurate atomic clocks on the ground.

Today, billions of people consult GPS on their phones and devices. The 31 currently operating GPS satellites, which orbit at just over 20,000 kilometers above Earth, each carry four rubidium-based atomic clocks that are synchronized daily with atomic clocks on the ground and with each other. GPS satellites continually broadcast their positions along with the time. When a GPS unit in a car, phone or other device receives signals from four of these satellites, it can use the time and position signals to determine where it — and thus we — are on the globe.
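Why four satellites? A receiver has four unknowns: three position coordinates plus the error of its own inexpensive clock. The sketch below shows one textbook way to solve for all four using Newton's method. It is an illustration only: the satellite coordinates, the receiver location and the 1-millisecond clock error are made-up test values, and real receivers also correct for atmospheric delays, relativity and more.

```python
import math

C = 299_792_458.0  # speed of light in meters per second


def solve_linear(a, b):
    """Solve a small linear system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        known = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - known) / m[r][r]
    return x


def locate(sats, pseudoranges, iterations=10):
    """Return (position in meters, receiver clock error in seconds).

    Each pseudorange is (true distance) + C * (receiver clock error).
    Starts from Earth's center and refines the guess with Newton steps.
    """
    x = y = z = bias = 0.0  # bias is the clock error expressed in meters
    for _ in range(iterations):
        jacobian, residuals = [], []
        for (sx, sy, sz), rho in zip(sats, pseudoranges):
            dist = math.dist((x, y, z), (sx, sy, sz))
            jacobian.append([(x - sx) / dist, (y - sy) / dist, (z - sz) / dist, 1.0])
            residuals.append(dist + bias - rho)
        dx, dy, dz, db = solve_linear(jacobian, [-r for r in residuals])
        x, y, z, bias = x + dx, y + dy, z + dz, bias + db
    return (x, y, z), bias / C


# Hypothetical test geometry: four satellites about 26,600 km from Earth's center.
SATS = [
    (26.6e6, 0.0, 0.0),
    (18.8e6, 18.8e6, 0.0),
    (18.8e6, -9.4e6, 16.3e6),
    (18.8e6, -9.4e6, -16.3e6),
]
TRUE_POSITION = (6.371e6, 0.0, 0.0)  # a point on Earth's surface
TRUE_CLOCK_ERROR = 1e-3              # receiver clock is 1 millisecond off

pseudoranges = [math.dist(TRUE_POSITION, s) + C * TRUE_CLOCK_ERROR for s in SATS]
position, clock_error = locate(SATS, pseudoranges)
print("position error (m):", math.dist(position, TRUE_POSITION))
print("recovered clock error (s):", clock_error)
```

Because light covers about 30 centimeters in a nanosecond, every nanosecond of timing error in a satellite clock shows up as roughly 30 centimeters of ranging error, which is why the clocks on board have to be atomic.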

And GPS no longer has the sky to itself. Russia, the EU, Japan and China have each launched networks of satellites providing atomically accurate time and positioning signals. Thanks to atomic clocks, navigating the world we live in has never been easier.

Putting Atoms on Ice

The next frontier in atomic timekeeping required doing something physicists had long dreamed of — taking full control of atoms by cooling them to frigid temperatures. In “hot clocks” like the beam clocks NIST and other metrology institutes were using to determine the official second, room-temperature atoms zip through microwave fields at several hundred meters per second. This limits the clock’s accuracy by making the energy transitions a bit “blurry” and hard to measure precisely. In other words, the cesium atoms’ motion slightly smears out the frequency of microwave radiation needed to induce an energy transition.

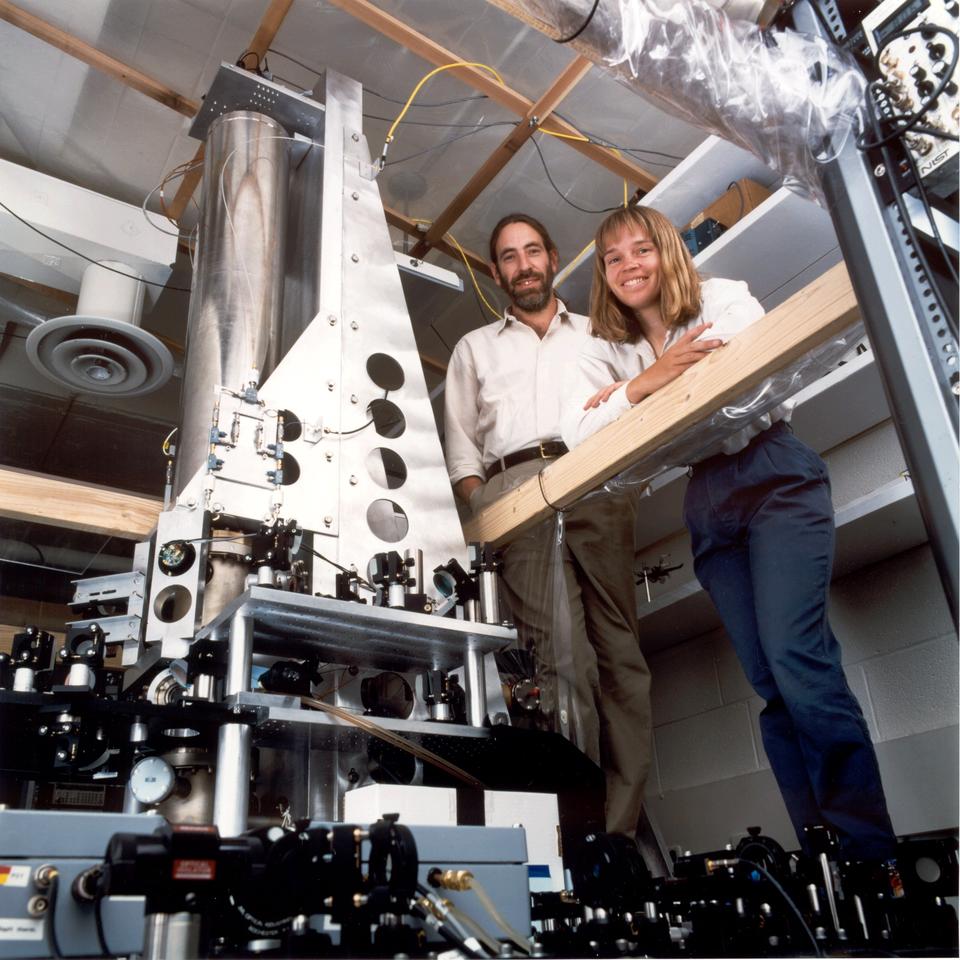

A key breakthrough came in the 1980s, when NIST physicist Bill Phillips and colleagues used lasers to slow atoms to just a few centimeters per second. Atoms moving this slowly are far easier to control and to use for tuning microwaves to the right frequency. For his groundbreaking achievement, Phillips shared the 1997 Nobel Prize in Physics.

Stanford University physicist Steven Chu and others took the next big step and built the first laser-cooled “atomic fountain,” picking up on a design Zacharias (the designer of the Atomichron) had come up with in the 1950s. In Chu’s team’s fountain clock, lasers trapped and cooled sodium atoms to near absolute zero, then tossed them gently upward. The atoms’ slow trajectory up and back down provided a much longer period for scientists to probe them with microwaves. This design heralded another leap in clock accuracy and earned Chu a portion of the 1997 Nobel Prize in Physics.

Nowadays, most fountain clocks use cesium or rubidium atoms. France’s metrology institute, SYRTE, was the first to use a cesium fountain clock for timekeeping. A few years later, NIST scientists built the agency’s first fountain clock, NIST-F1, which started operating in 1999; the German metrology institute PTB also brought one online around this time.

Accurate to within one second in 20 million years, NIST-F1 was on par with France’s fountain clock and more than three times as accurate as NIST-7.

NIST-F2, launched in 2014, was about three times more accurate than the final iteration of F1, meaning it would neither gain nor lose a second in 300 million years. Among other improvements, this fountain clock included a cryogenically cooled chamber to further reduce frequency shifts.

NIST-F2 was cumbersome to run as a frequency standard, however, because it needed to be cooled using liquid nitrogen to around minus 200 degrees Celsius. So in 2015, it was retired. But it helped scientists understand how cooling fountain clocks can improve accuracy, and Italy’s metrology institute, INRIM, continues to operate a chilled clock similar to NIST-F2.

Currently, 10 countries — Canada, China, Germany, France, Italy, Japan, Russia, Switzerland, the U.K. and the United States — measure the second using cesium fountain clocks and send data to the International Bureau of Weights and Measures (BIPM), located on international territory outside Paris. Meanwhile, the U.S. Naval Observatory runs several rubidium fountain clocks at its labs in Washington, D.C., and outside Colorado Springs, Colorado, to produce the time scale that steers GPS and provides positioning and navigation to the world.

Going Optical

Even as they honed cesium clocks into the best timepieces the world had ever seen, clock makers started dreaming of a new generation of atomic clocks. These clocks would leave behind cesium, the timekeeping workhorse of the last half-century, for atoms with energy transitions in the visible part of the electromagnetic spectrum. Visible light waves pack 100,000 times as many peaks and valleys into a second as microwaves do, so they can chop time into far smaller pieces and make far more accurate measurements.

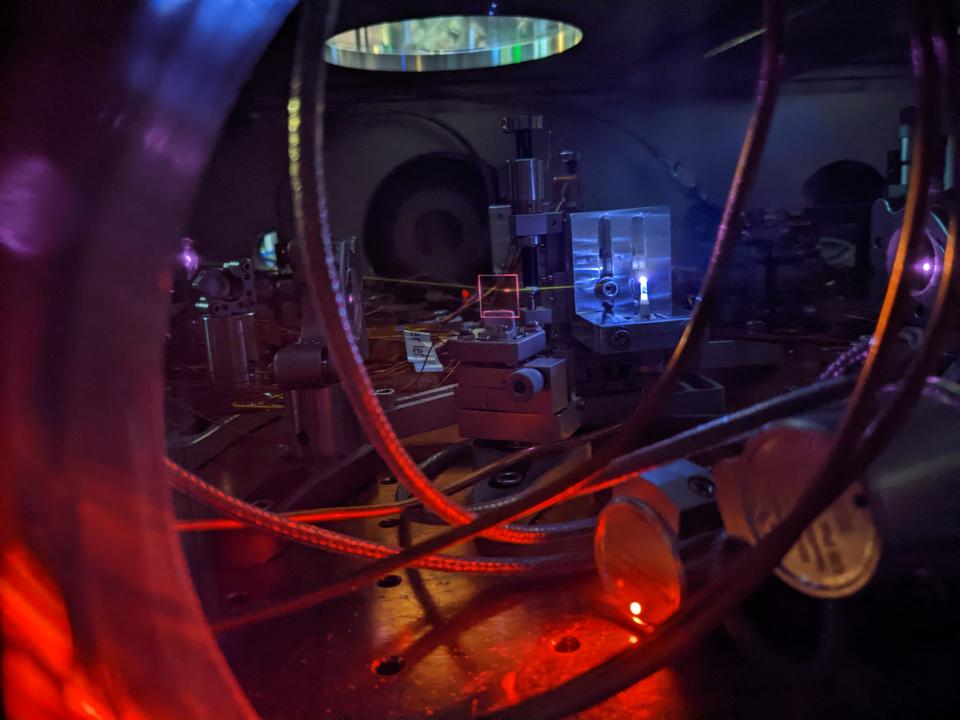

To make these so-called optical clocks, scientists had to overcome several daunting challenges. First, they needed to find atoms with high-energy electron transitions corresponding to optical frequencies that could be controlled with lasers. Early optical clocks used hydrogen, calcium and mercury atoms, but aluminum, strontium and ytterbium have since taken center stage.

Scientists also had to improve their lasers. Lasers emit narrow beams of monochromatic, coherent light, unlike the multicolored, incoherent light that comes from, say, a light bulb or the Sun. The first working laser was built in 1960, and you’ve probably used lasers to scan groceries, amuse cats and do many other things. But to manipulate and probe the inner workings of atoms, physicists needed lasers that were more precise and stable than any that had come before.

Even the world’s best lasers weren’t enough. Physicists also needed a way to count visible light wave oscillations with extreme accuracy — something not possible with conventional electronics. That breakthrough came in 1999, when John Hall at JILA, a joint institute of NIST and the University of Colorado, and Theodor Hänsch at the Max Planck Institute for Quantum Optics in Garching, Germany, demonstrated the first frequency combs. Frequency combs are essentially rulers for light that can translate visible light into microwave signals that electronics can measure.

In 2006, NIST researchers used the frequency comb to build the first optical atomic clock to outperform the best cesium clock in accuracy. The clock was based on a single mercury ion. (An ion is an electrically charged atom.)

But aluminum ions ultimately proved less sensitive to thermal effects. In 2010, NIST’s quantum logic clock, based on a single aluminum ion, outperformed the mercury ion clock’s accuracy by about a factor of two, reaching an uncertainty that would correspond to neither losing nor gaining a second in three billion years.

Since then, scientists at NIST and elsewhere have lowered the uncertainties on aluminum-based optical clocks by another factor of 10. If one had been running since the Big Bang, 13.8 billion years ago, it would have neither gained nor lost even one second.

A second, radically different, optical clock design emerged from the lab of Hidetoshi Katori, a physicist at the University of Tokyo. In the early 2000s, Katori invented a way to trap thousands of ultracold atoms in matrices of laser light, known as optical lattices — think of eggs resting in an egg carton. Because optical lattice clocks allow scientists to probe thousands of atoms at once, they can perform precision measurements much faster than single-ion clocks. This allows scientists to validate the clock performance over shorter time periods and perform precision studies of the clock frequencies.

Since Katori and colleagues invented the optical lattice clock in the mid-2000s, scientists in several countries, including Japan, Germany and the U.S., have built versions based on different atoms. Ytterbium and strontium are currently the most popular.

Similar to the best ion clocks, the best lattice clocks would not have gained or lost a second had they started running at the Big Bang. In 2024, a strontium optical lattice clock at JILA set new records for accuracy and precision. Its ticks of time are so pristine that it would gain or lose less than one second in 30 billion years.

And after two and a half decades, optical clocks are starting to break out of the lab. A company based in California announced in late 2023 that it was selling a portable optical clock based on iodine molecules. Another company is developing a second design, building on work published several years ago by scientists at NIST. In 2024, a Japanese company announced it was selling the first commercial optical lattice clock, based largely on Katori’s design.

Meanwhile, Katori has taken a portable optical lattice clock to the top of the tallest building in Japan to measure the effects of Einstein’s general relativity, which predicts that clocks tick slightly faster in lower gravity. NIST scientists are planning a similar experiment using a mountain in Colorado, which could provide the strongest test to date of general relativity’s equivalence principle.
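To get a feel for the size of this effect, here is a back-of-the-envelope sketch. It uses the standard weak-field approximation, in which raising a clock by a height h near Earth's surface speeds it up by a fraction of about g times h divided by the speed of light squared. The 450-meter height is an assumed round number for illustration, not the figure from Katori's experiment, and the clock uncertainty is derived from the "one second in 30 billion years" figure quoted above.

```python
# Gravitational time dilation near Earth's surface (weak-field approximation).
# All inputs below are illustrative assumptions, not measured values.

C = 299_792_458.0                   # speed of light, m/s
G_SURFACE = 9.80665                 # standard gravitational acceleration, m/s^2
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def fractional_shift(height_m: float) -> float:
    """Fractional speed-up of a clock raised by height_m relative to the ground."""
    return G_SURFACE * height_m / C**2


def smallest_resolvable_height(fractional_uncertainty: float) -> float:
    """Height change whose time-dilation shift equals the clock's uncertainty."""
    return fractional_uncertainty * C**2 / G_SURFACE


tower_shift = fractional_shift(450.0)  # hypothetical 450 m climb
clock_uncertainty = 1.0 / (30e9 * SECONDS_PER_YEAR)

print(f"shift after a 450 m climb: {tower_shift:.2e}")
print(f"clock uncertainty:         {clock_uncertainty:.2e}")
print(f"resolvable height change:  {smallest_resolvable_height(clock_uncertainty) * 100:.1f} cm")
```

Under these assumptions, the shift from a few hundred meters of elevation is tens of thousands of times larger than the clock's uncertainty, and a height change of about a centimeter would in principle be detectable.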

Yet even the mind-bogglingly accurate optical clock will likely not have the last word on time. Physicists are already eyeing a new type of clock that could yet again revolutionize timekeeping.

Into the Nucleus

In 2003, physicists at the Physikalisch-Technische Bundesanstalt, Germany’s metrology institute, proposed a clock that, instead of tapping an energy transition of electrons, would use one inside the atomic nucleus. Most nuclear transitions are far too high in energy to measure with lasers. But a certain isotope of the element thorium, thorium-229, has an unusually low-energy transition in the ultraviolet part of the electromagnetic spectrum.

A clock built on such a frequency could chop time more finely than any optical clock. Better yet, the atomic nucleus is far better shielded from environmental disturbances than are electrons. That has given physicists hope that a nuclear clock, if it is ever built, could almost automatically become the most stable clock ever invented.

Recently, physicists cleared a major hurdle on the path toward building a nuclear clock. In 2024, three research groups, including one at JILA, reported that they had used lasers to excite quantum jumps within the thorium nucleus. Previously, the energy of the jumps had only been measured indirectly. The new results allow physicists to focus their efforts on developing lasers that can measure the thorium nuclear transition frequency with enough precision to advance beyond existing clocks.

Still, a functional and useful nuclear clock is years away, in part because ultraviolet lasers are far behind their optical counterparts in terms of technology development and sophistication. But physicists are a restless bunch, and several research groups are working toward a new generation of clocks to rule them all.

A Timeless Quest

Allen Astin, the director of NIST from 1951 to 1969, spoke in 1953 of “the romance of precision measurement.” Present-day NIST physicist Stephan Schlamminger has mused that this romance emerges from the fact that just as love is a quest to grow close to another person, measurement science seeks to come as close as possible to the true quantities set by the laws of the universe.

Atomic clocks are among the best measurement devices ever created. They have brought humans exquisitely close to producing pure, perfect ticks of time. But perfection remains forever a quest, not a destination. And the atomic clock is still young.

In the coming decades, we will see a new second and, for the first time, a global timekeeping system based solely on atoms. We will see optical atomic clocks launched into space, perhaps replacing the less accurate clocks on GPS satellites, perhaps paving the way for human exploration of our solar system and beyond. Atomic clocks may work their way into our personal devices; you and I may someday walk around with atomic clocks in our pockets and on our wrists.

In all likelihood, the vast majority of the atomic clock’s story remains to be written.