PerfLoc Frequently Asked Questions (FAQs)

Post-Competition note

Admittedly, there is less support for PerfLoc today than there was while the Competition was running in 2017-2018. NIST will try to answer any questions you may have about PerfLoc, but we will not be in a position to answer every question sent to us. Fortunately, the PerfLoc documentation is extensive, so future users of PerfLoc data may find the answers to their questions there.

FAQs

Q: How can I learn more about the PerfLoc Prize Competition?

A: We held a webinar on May 18th, 2017, 1:00PM - 2:30PM, EDT (UTC-4:00). You can find more information about the webinar here. If we don't answer a question you have, email PerfLoc [at] nist.gov and we will make sure you get an answer.

Q: Where can I find the slides for the PerfLoc Webinar held on May 18, 2017?

A: The slides for the PerfLoc Webinar can be found here.

Q: Where can I find the recorded video for the PerfLoc Webinar held on May 18, 2017?

A: The video for the PerfLoc Webinar can be found here.

Q: Why did NIST use .pbs files?

A: According to Google, protocol buffers are simpler, smaller, faster, etc. than XML for serializing structured data: https://developers.google.com/protocol-buffers/docs/overview#whynotxml.

Q: How can I read the .pbs files?

A: We suggest that you read the official Google documentation on protocol buffers at https://developers.google.com/protocol-buffers/docs/proto. You will find a lot of useful information on that page, including instructions for and examples of reading such files in various programming languages. If you still have problems with this, please do not hesitate to write to us.

Q: How many Wi-Fi access points exist in the four buildings where the PerfLoc data was collected?

A: Recall that Building 1 has no access points. We have provided information about 96 access points. These are in Buildings 2-4 or in one other building that neighbors Building 2. (The Wi-Fi signal from the neighboring building can be heard in Building 2.) If you plot the locations of the 96 access points on the footprints of the different buildings, you can figure out how many are in each building.
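The counting step described above can also be done programmatically. The following is a minimal Python sketch that uses a ray-casting point-in-polygon test. The footprint corners and access point coordinates are made-up placeholders, not PerfLoc data, and the function names are hypothetical; substitute the coordinates given in the PerfLoc documentation.

```python
def point_in_polygon(x, y, polygon):
    """Ray-casting test: True if (x, y) lies inside the polygon.

    polygon is a list of (x, y) corners in order. Points that fall
    exactly on an edge may be classified either way.
    """
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does a horizontal ray from (x, y) toward +x cross this edge?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def count_aps_per_building(access_points, footprints):
    """Count access points inside each footprint; the rest are 'unassigned'."""
    counts = {name: 0 for name in footprints}
    counts["unassigned"] = 0
    for x, y in access_points:
        for name, polygon in footprints.items():
            if point_in_polygon(x, y, polygon):
                counts[name] += 1
                break
        else:
            counts["unassigned"] += 1
    return counts


# Hypothetical footprints and access point locations (meters), for illustration only.
footprints = {
    "Building 2": [(0, 0), (40, 0), (40, 20), (0, 20)],
    "Building 3": [(100, 0), (130, 0), (130, 30), (100, 30)],
}
access_points = [(5, 5), (35, 15), (110, 10), (60, 10)]

print(count_aps_per_building(access_points, footprints))
```

Access points that fall outside every footprint, such as those in the building neighboring Building 2, end up in the "unassigned" bucket in this sketch.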

Q: Did NIST calibrate the magnetometers in the smartphones before data collection?

A: Yes. In fact, we calibrated all four phones before each data collection scenario. We did this by tilting and rotating the phones, following the procedures described on a number of websites and in the manufacturers' documentation, until we obtained the highest possible calibration level (3).

Q: Can I use the scans made during the five seconds following a request to the app for a location estimate to improve the localization accuracy of the app?

A: Yes, as long as you produce a location estimate within five seconds to meet the latency requirements of the apps. However, you need to consider that there can be a tradeoff between accuracy and latency. If your app takes five seconds to produce a location estimate, you may have moved ~7 meters, at normal walking speeds, away from where you asked for the location estimate.

Q: Can I use the data from multiple phones to estimate the locations of dots?

A: In principle, there is no way for NIST to prevent you from doing so. However, if you are selected as a finalist and invited to the NIST campus for live tests of the app you have developed, your app would have to work with whatever data it measures in real-time. It will not have access to data from other phones. Therefore, it may be wiser to develop an app that uses whatever data is available on the given phone.

Q: Can I just develop an indoor localization algorithm and not an app?

A: In order to win any prizes, you need to have an Android app. If you do very well during the over-the-web testing of your location estimates and you are selected as a finalist, NIST will ask you if you have an app before inviting you to the NIST campus for the Finalists App Demo Days at NIST.

Q: Will NIST use one of the four buildings in which it collected the PerfLoc data for the live tests during the Finalists App Demo Days?

A: No, we will use a new building.

Q: Will there be any training data for the building that will be used for the Finalists App Demo Days?

A: No. However, some information about the building will be made available to the finalists, such as the MAC addresses and 3D coordinates of the Wi-Fi access points and the coordinates of the corners of the building.

Q: Would it be possible for NIST to make available some fingerprinting data?

A: We are already providing some sparse fingerprinting data for each of the four buildings through the training data sets we have posted. Each training data set includes ground truth locations at a number of timestamps. We have also made available Wi-Fi scans within one second of each of those time instances. Therefore, you have a few "Wi-Fi fingerprints" in each building (except for Building 1 that does not have Wi-Fi access points) that you can use for location estimation for the test data sets in the same building.

We felt that collecting sufficiently dense Wi-Fi fingerprints in our four buildings with 30,000 square meters of space would have been a huge amount of work. Therefore, we did not collect any Wi-Fi or magnetic fingerprints, and we have no plans to do so in the context of the PerfLoc Prize Competition. It is not realistic to assume that such data is available in a building in which a smartphone indoor localization app is going to be used. In addition, Wi-Fi fingerprint data tend to become stale over time.

Q: Why is the score I am getting for my overall SE95 inconsistent with the formula given in the PerfLoc Competition Rules?

A: Thank you for pointing out this discrepancy. We have revised the formula in PerfLoc Competition Rules so that it is now consistent with the way the score is computed by our website.

Q: I am getting smaller SE95 values for each of the four buildings than the corresponding values obtained by another participant. Yet, my overall SE95 performance (and hence score) is exactly the same as the other participant's overall SE95. How can this happen?

A: The fact that you got exactly the same overall SE95 is sheer coincidence. It is easy to show the following:

min{SE95 for Building i, i = 1, 2, 3, 4} ≤ Overall SE95 ≤ max{SE95 for Building i, i = 1, 2, 3, 4}

Therefore, the overall SE95 can take values in a potentially wide range. In fact, you can have smaller SE95 in individual buildings and yet end up with a larger overall SE95 than another participant! We show this through an example.

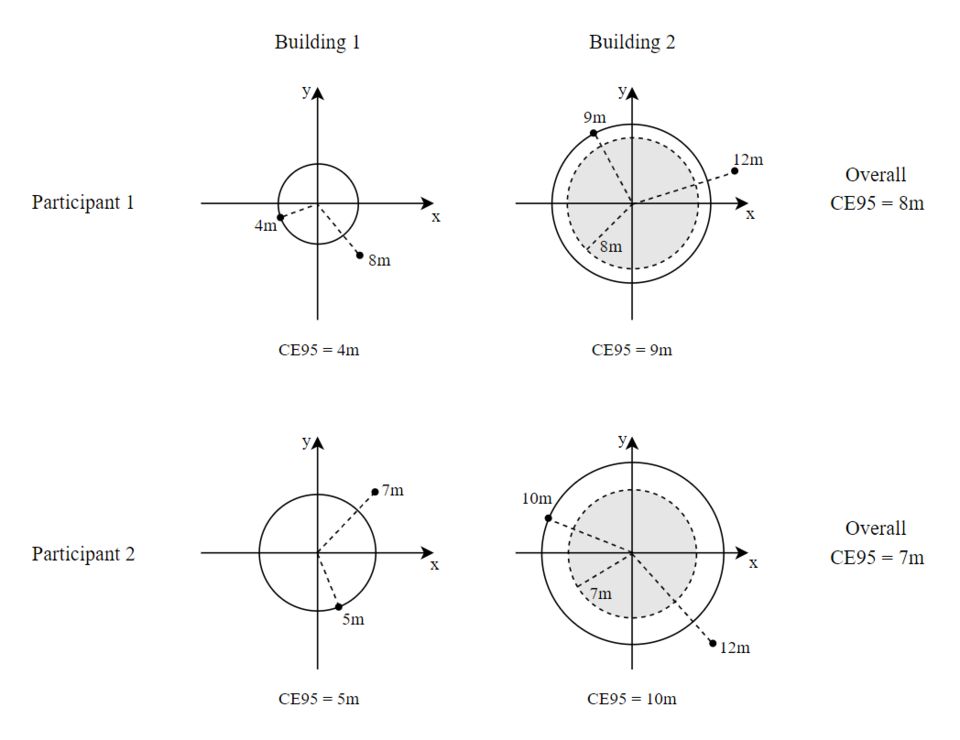

Suppose you have two buildings with 20 test points in each. For the sake of simplicity, so that we can draw circles instead of spheres, we are going to look at the CE95 (circular error 95%) performance metric. The figures show why Participant 1 ended up with a larger overall CE95 value than Participant 2, even though Participant 1 had smaller CE95 values in both buildings than those obtained by Participant 2.

For Participant 1 in Building 2, 18 of the error vectors are inside or on the perimeter of the shaded region, one is on the perimeter of Building 2's CE95 circle with radius 9 m, and one is 12 m away from the origin.

For Participant 2 in Building 2, 18 of the error vectors are inside or on the perimeter of the shaded region, one is on the perimeter of Building 2's CE95 circle with radius 10 m, and one is 12 m away from the origin.
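The effect can be checked numerically. The sketch below is illustrative only. It assumes that CE95 is the smallest radius containing at least 95% of the error magnitudes and that the overall CE95 is computed over the pooled errors from both buildings. The Building 2 values (9 m, 10 m, and the 12 m outliers) follow the description above; the Building 1 values and the 1 m "small" errors are hypothetical numbers chosen to reproduce the situation.

```python
def ce95(errors):
    """Smallest radius that contains at least 95% of the error magnitudes."""
    ranked = sorted(errors)
    k = (95 * len(ranked) + 99) // 100  # ceil(0.95 * n) in integer arithmetic
    return ranked[k - 1]


SMALL = [1.0] * 18  # 18 errors inside the shaded region (assumed magnitude)

# Building 2 values follow the text; Building 1 values are hypothetical.
participant_1 = {
    "Building 1": SMALL + [5.0, 12.0],
    "Building 2": SMALL + [9.0, 12.0],
}
participant_2 = {
    "Building 1": SMALL + [6.0, 6.5],
    "Building 2": SMALL + [10.0, 12.0],
}


def summarize(results):
    """Return per-building CE95 values and the CE95 of the pooled errors."""
    per_building = {name: ce95(errs) for name, errs in results.items()}
    pooled = [e for errs in results.values() for e in errs]
    return per_building, ce95(pooled)


for label, results in (("Participant 1", participant_1),
                       ("Participant 2", participant_2)):
    per_building, overall = summarize(results)
    # The overall value always lies between the smallest and largest per-building values.
    assert min(per_building.values()) <= overall <= max(per_building.values())
    print(label, per_building, "overall:", overall)
```

With these numbers, Participant 1 has the smaller CE95 in each building (5 m and 9 m versus 6 m and 10 m) but the larger overall CE95 (9 m versus 6.5 m), because Participant 1's two largest errors across both buildings are both 12 m.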

Q: Six modes of mobility have been described in the PerfLoc User Guide. Can I expect multiple modes in a single dataset/scenario for both test and training datasets?

A: It depends! The training scenario for each building uses only the second mode of mobility, that is, "walking continuously and without any pause throughout the course". The test scenarios use all six mobility modes. For example, the third mode of mobility reads "running / walking backwards / sidestepping / crawling part of the course", with normal walking for the rest of the course.

We did not want to specify which mode of mobility is in which dataset and where in the dataset, because your app should ideally detect any change in the mobility mode automatically. It does not make sense to have an app that you must tell that you are about to start running.

Therefore, it is best to have a mobility mode detector as part of the app that constantly analyzes the sensor data to detect transitions from one mode of mobility to another. This would be like a finite state machine. Once the correct mobility mode has been detected, there have to be appropriate changes in the way that you process the sensor data and fuse it with RF data.
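As a rough illustration of the finite state machine idea, here is a minimal Python sketch. It is not a NIST-provided algorithm. The class name, the thresholds, and the use of the standard deviation of acceleration magnitude as the only feature are all assumptions made for illustration, and only three simplified modes are covered rather than the six PerfLoc mobility modes.

```python
from statistics import pstdev

# Hypothetical thresholds on the standard deviation of acceleration
# magnitude (m/s^2) within a window; real values would need tuning.
STILL_MAX = 0.3
WALK_MAX = 3.0


def classify_window(accel_magnitudes):
    """Map one window of acceleration magnitudes to a raw mode label."""
    spread = pstdev(accel_magnitudes)
    if spread < STILL_MAX:
        return "still"
    if spread < WALK_MAX:
        return "walking"
    return "running"


class MobilityModeDetector:
    """State machine that switches mode only after `confirm`
    consecutive windows agree on the same new mode."""

    def __init__(self, initial="still", confirm=3):
        self.mode = initial
        self.confirm = confirm
        self._candidate = None
        self._streak = 0

    def update(self, accel_magnitudes):
        observed = classify_window(accel_magnitudes)
        if observed == self.mode:
            self._candidate, self._streak = None, 0
        elif observed == self._candidate:
            self._streak += 1
        else:
            self._candidate, self._streak = observed, 1
        if self._candidate is not None and self._streak >= self.confirm:
            self.mode = self._candidate
            self._candidate, self._streak = None, 0
        return self.mode


# Usage with made-up sensor windows.
detector = MobilityModeDetector(confirm=2)
still = [9.80, 9.81, 9.79, 9.80]
walking = [8.5, 11.0, 9.0, 10.8]
modes = [detector.update(w) for w in (still, walking, walking, still)]
print(modes)
```

Requiring several consecutive windows to agree keeps a single noisy window from triggering a mode change. The mode returned by `update` could then select which step-length model or sensor fusion settings to apply.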

Q: Will we be allowed to calibrate the NIST smartphone that will be used during the Finalist App Demo Days (e.g. perform some figure eights for the magnetometer, or leave it flat on the ground to calibrate gyros, etc.)?

A: Yes, a few minutes will be given to each team to calibrate the phone.

Q: Will there be indoor / outdoor transitions, for example getting a GPS fix before going inside - sort of how we have initial position estimates for the test datasets?

A: Given that GPS fixes can have significant error, each scenario will start at a dot with known location, and the 3D coordinates of that dot will be given to each team. We may still include excursions outside followed by a return into the same building, but this has not been finalized.

Q: How should the results be reported from the app to the evaluators? Will the phones be connected to the internet for the whole time?

A: We plan to have a NIST app that will be on the NIST-provided Google Pixel XL smartphone. Your app will also be installed on that smartphone. Whenever we are on a dot during a test scenario, you will tap on a button on the NIST app that will ask your app for a location estimate. The NIST app will write the location estimate received from your app, and the time it took for your app to provide that location estimate, to a file on the smartphone that will be used for performance evaluation.

We have not decided whether the smartphone will be allowed to connect to the Internet, because your app may use a location service in the cloud, such as Google Maps. There is a tradeoff here.

Q: Are there any details about what to expect in terms of number of courses, durations, mobility types, etc.?

A: Not yet. All the testing should be completed on the first day. If we could use multiple phones, we could test multiple apps simultaneously, but differences in the phones may put some apps under test at a disadvantage.

The number of scenarios (courses) will also depend on how many teams will be invited to the NIST Campus. We'd like to use scenarios that are about the same length as the test scenarios included in the PerfLoc data, i.e., about 20 minutes. It is unlikely that we will include all modes of mobility in the scenarios.

Q: Can NIST make more training data available for larger buildings?

A: We do not intend to make more training data available. This was a judgment call and there were tradeoffs involved in this decision. Had we made ground truth locations available at a larger number of points in our training sequences, we would have had fewer points available for testing, because the total number of points/dots whose locations we surveyed was fixed. However, please see the following two questions.

Q: The training points with ground truth location are far apart. How did you decide on the separation between training points?

A: We wanted to use only 10% of our surveyed dots for training purposes and leave the other 90% for testing. We had two choices. One was to collect training data in ~10% of the area of a building and provide the ground truth locations of all the dots in that area. This would have resulted in training dots with the same density / separation as the test dots. The other possibility was to provide training data in the entire building, but make the training dots far apart. We chose the latter, because we knew you would not be able to fine-tune your algorithms to cope with IMU drift if we gave you data collected in a small area of the building.

Q: We do not have enough training data with ground truth annotation for different modes of mobility. Can NIST collect such data and make it available to the PerfLoc community?

A: Thank you for this request; it is under consideration. If we determine it is feasible (and appropriate) we will notify all participants.

Q: Why did NIST not make the floor plans of the buildings in which it collected data available to the PerfLoc community?

A: There were two reasons. First, at least today, it is not realistic to assume that the floor plans of a building in which a smartphone indoor localization app is going to be used will be available to the smartphone. Maybe this will change in the future for buildings open to the general public, but it is not the case today. Also note that it is a challenge to keep floor plans up to date.

Second, there are other indoor localization competitions in which floor plans are made available to the participants. NIST decided to be different and fill a void.

Q: Why did NIST use SE95 as the performance metric for PerfLoc?

A: Other indoor localization competitions use the average localization error as the performance metric, with one adding a penalty term whenever you make an incorrect guess of the floor on which the localization device is. For PerfLoc, we wanted to provide instantaneous feedback on how well your algorithms are doing. It is easy to show that when such feedback on average localization error is available, one can develop an algorithm that computes the ground truth locations of our dots. We also took the additional measure of limiting the number of location estimate uploads each participant can do to 150.

We realize that working with SE95 can be heart-wrenching! (See another question about SE95 on this page.) You could make significant improvements by lowering the average localization error and yet not see any improvement in SE95 as long as the error vectors on the surface of the SE95 sphere are not "pulled in". You could even see an increase in SE95! On the positive side, the guarantees that SE95 provides are valuable. It is the performance metric used by many in the PNT (Positioning, Navigation, and Timing) community as well as by the FCC in the E911 context.

To provide some relief for this situation, we are going to make available SE50 figures in addition to SE95. SE50 is the median of the localization error and it is close to the mean for many distributions. At least you may see improvements in SE50 even if your SE95 is not getting smaller. SE95, however, will remain the performance metric used in PerfLoc to assess the effectiveness of your algorithms/apps.
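The behavior described above can be seen in a small numerical example. This Python sketch assumes that SE95 and SE50 are the smallest radii containing at least 95% and 50% of the 3D error vectors, respectively. The function name and the error vectors are made up for illustration and are not PerfLoc data.

```python
import math


def spherical_error(error_vectors, percent):
    """Smallest radius containing at least `percent`% of the 3D error vectors."""
    radii = sorted(math.sqrt(x * x + y * y + z * z) for x, y, z in error_vectors)
    k = (percent * len(radii) + 99) // 100  # ceil(percent / 100 * n)
    return radii[k - 1]


def mean_error(error_vectors):
    """Average magnitude of the 3D error vectors."""
    return sum(math.sqrt(x * x + y * y + z * z)
               for x, y, z in error_vectors) / len(error_vectors)


# Hypothetical error vectors (meters). The two largest errors are the same
# before and after; only the 18 smaller errors are improved.
tail = [(6.0, 6.0, 3.0), (8.0, 8.0, 4.0)]   # magnitudes 9 m and 12 m
before = [(2.0, 2.0, 1.0)] * 18 + tail      # 18 errors of 3 m
after = [(0.0, 0.0, 1.0)] * 18 + tail       # 18 errors of 1 m

for label, errors in (("before", before), ("after", after)):
    print(label,
          "mean:", mean_error(errors),
          "SE50:", spherical_error(errors, 50),
          "SE95:", spherical_error(errors, 95))
```

In this example the mean error and SE50 both drop after the improvement, while SE95 stays at 9 m because the error vector that defines the SE95 sphere was not "pulled in".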

Q: Can I assume that the path/course taken in a building during a test scenario is a subset of the path/course for the training sequence in the same building?

A: No. Of course, parts of the course in each test sequence may be found in the course for the training sequence, but one is not a strict subset of the other. A certain level of overlap is expected, because each building has a finite number of corridors and stairwells.

Q: Can I upload location estimates more than three times per day now that the end of the testing period is fast approaching?

A: That is a good suggestion, even though it may be a moot point with the extension we just made to the PerfLoc timeline. We will write to PerfLoc participants shortly regarding the changes necessary as a result of the extension.

Q: Do I get disqualified for a cash award if I cannot go to the IPIN Conference?

A: NIST is not sending anyone to IPIN as a result of the extension we made to the PerfLoc timeline. NIST would like to send up to three winners to the 2018 Microsoft Indoor Localization Competition in April 2018. You will not be disqualified for a cash award if you cannot go to that competition, but you would be disqualified if you cannot attend the Finalist App Demo Days at NIST. To receive a cash award you must meet the eligibility criteria.

Q: Will NIST make more information available about the NIST app that will interface with a PerfLoc app and will be used during the live tests at the Finalist App Demo Days?

A: Certainly. We intend to make that app available to the PerfLoc participants or at least clearly specify its interfaces so that the participants know how to prepare themselves for the live tests and what plugs to put in their apps so that the apps can talk to the NIST app.

Q: Will NIST provide training data for the building in which the live tests of the Finalist App Demo Days take place? What will that event look like?

A: NIST does not plan to provide training data for that building. We have provided a fair amount of data for you to develop your algorithms/apps. (We know that some believe we could have provided more.) The expectation is that once you have developed your app, it should be ready for use in any building under the PerfLoc operating assumptions, namely, the starting position, starting direction of motion, MAC addresses and locations of Wi-Fi access points, building footprint, etc. will be made available to the app.

NIST will make sufficient information available to make it possible for a bona fide PerfLoc app to function during the live tests. Please stay tuned.

Q: How will NIST deal with a situation where the performance of the app that a finalist submits for the live tests at NIST is not as good as the algorithm the same participant used for the offline evaluation phase of the competition?

A: NIST will not be in a position to make such a determination. The app will be tested in a building other than the four buildings that were used in the offline phase. Therefore, it is not easy to compare the performance numbers.

A participant may develop an algorithm that qualifies him/her as a finalist, but he/she may realize later that changes have to be made in the algorithm in order to make it run as an app in real-time on the Google Pixel XL smartphone. However, 20 points are at stake at the Finalist App Demo Days. The way things are progressing, those 20 points are as important as your offline performance. Hence, a participant has to do his/her best to submit an app with high performance to increase the chances of winning the top prize.

Q: What can you tell me about the initial heading in each scenario?

A: It was the direction along which we walked for some distance. If we said we walked toward South, that means we walked in that direction for at least a couple of meters, sometimes more. The walk into the building was not always along a straight line. Therefore, the initial heading applies to the first few seconds of our walk only.

Any questions? Please email PerfLoc [at] nist.gov.