AI RMF Development

In collaboration with the private and public sectors, NIST has developed a framework to better manage risks to individuals, organizations, and society associated with artificial intelligence (AI). The NIST AI Risk Management Framework (AI RMF) is intended for voluntary use and to improve the ability to incorporate trustworthiness considerations into the design, development, use, and evaluation of AI products, services, and systems.

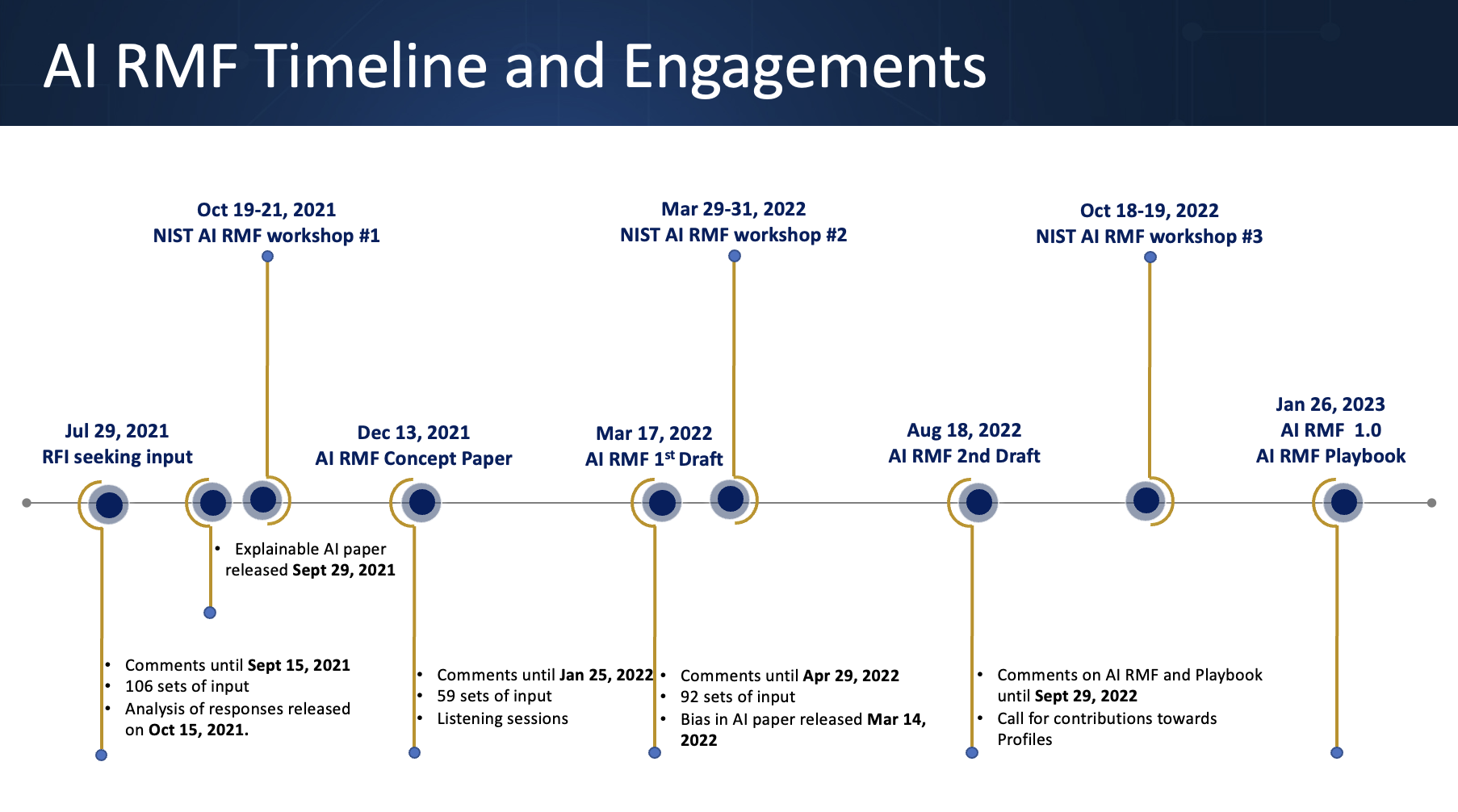

Released on January 26, 2023, the Framework was developed through a consensus-driven, open, transparent, and collaborative process that included a Request for Information, several draft versions for public comments, multiple workshops, and other opportunities to provide input. It is intended to build on, align with, and support AI risk management efforts by others.

NIST has also published a companion NIST AI RMF Playbook.

NIST released the first complete version of the NIST AI RMF Playbook on March 30, 2023.

On January 26, 2023, NIST released the AI Risk Management Framework (AI RMF) 1.0, along with a companion NIST AI RMF Playbook, a NIST AI RMF Video Explainer, an AI RMF Roadmap, AI RMF Crosswalks, and various Perspectives.

NIST issued a second draft of the AI RMF for comment, both in writing and at the October 18-19, 2022, workshop.

NIST issued a first draft of the AI RMF for comment, both in writing and at the March 29-31, 2022, workshop.

NIST issued a Concept Paper for the AI RMF for public review and comment on December 13, 2021.

NIST prepared a brief summary of responses to the July 29, 2021, Request for Information (RFI) in advance of the workshop held on October 19-21, 2021.