Ampere: History

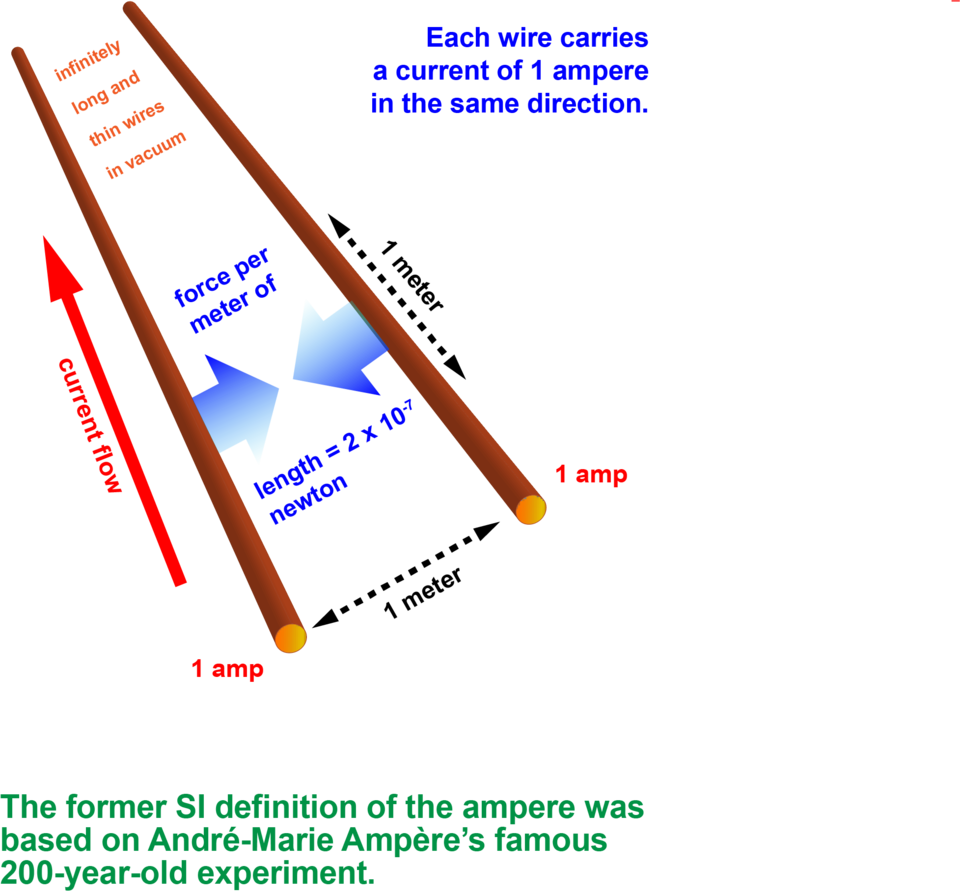

A French mathematician and physicist named André-Marie Ampère was inspired by this demonstration to work out the connection between electricity and magnetism. He found that if you place two wires parallel to each other and pass a current through each, the wires either attract or repel each other, depending on whether the currents flow in the same or opposite directions. This happens because the current in each wire generates a magnetic field that pushes or pulls on the current in the other. The longer the wires, the closer together they are, and the larger the currents passing through them, the greater the attraction or repulsion.

Until 2019, the SI definition of the ampere was based on this arrangement. Under ideal conditions, with two infinitely long, negligibly thin, parallel wires placed exactly 1 meter apart in a vacuum, a current of 1 ampere in each wire would produce a force between them of 2 × 10⁻⁷ newtons per meter of wire length. That’s not much: roughly a ten-millionth of the weight of an average apple. But it’s there.
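The force in this arrangement follows from the standard expression for two long, straight, parallel wires, F/L = μ₀I₁I₂/(2πd), where d is the distance between them. Under the pre-2019 definition, the magnetic constant μ₀ was fixed at exactly 4π × 10⁻⁷ N/A², so 1 A in each wire at 1 m gives exactly 2 × 10⁻⁷ N per meter. The short Python sketch below checks that arithmetic. It assumes ideal wires (infinitely long, negligibly thin, in vacuum), and the function name is illustrative only.

```python
import math

# Magnetic constant, fixed at exactly 4π × 10⁻⁷ N/A² under the pre-2019 SI.
MU_0 = 4 * math.pi * 1e-7


def force_per_meter(i1: float, i2: float, separation_m: float) -> float:
    """Force per meter of length (N/m) between two ideal parallel wires.

    Assumes infinitely long, negligibly thin wires in vacuum.
    A positive result means attraction (currents in the same direction);
    a negative result means repulsion (currents in opposite directions).
    """
    return MU_0 * i1 * i2 / (2 * math.pi * separation_m)


# The pre-2019 defining case: 1 A in each wire, 1 m apart.
print(f"{force_per_meter(1.0, 1.0, 1.0):.1e} N per meter")  # 2.0e-07 N per meter

# Reversing one current turns attraction into repulsion.
print(force_per_meter(1.0, -1.0, 1.0) < 0)  # True
```

Doubling either current doubles the force, while doubling the separation halves it, which is why the definition had to fix the spacing exactly.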

Throughout the 19th century, scientists continued to work out the rules governing electromagnetism (EM). In 1861, they began proposing systems of units for EM, which included units for current, voltage and resistance. However, different scientists used different unit systems; at one point, four different systems of EM units were in use simultaneously. Scientists wanted a single system of units that everyone could share.

Choosing the Ampere

In 1893, the International Electrical Congress (IEC), meeting in Chicago, settled on two units to serve as the basis for the others: the ohm for resistance and the ampere for current. The Congress’s decision was formally accepted at an international conference of scientists held in London in 1908.

The ohm was one of the first units to be created for electricity. It is arguably one of the easiest electrical quantities to picture, since wires of different lengths, for example, have different resistances. But realizing the ohm standard was a circuitous process. It began with the 1860 “Siemens mercury unit,” defined as the resistance of a column of pure mercury 1 meter long with a cross-section of 1 square millimeter, moved on to various electrical components such as coils, inductors and capacitors, and finally, in the 1990s, arrived at phenomena based on quantum mechanics.
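The mercury unit rests on the general relation R = ρL/A: a conductor’s resistance grows with its length L and shrinks as its cross-sectional area A increases. As a rough check, the sketch below assumes a resistivity for pure mercury at 0 °C of about 9.407 × 10⁻⁷ Ω·m (an approximate reference value, not taken from this article) and a cross-section of 1 square millimeter. With those inputs, a 1-meter column comes out to roughly 0.94 of a modern ohm. The helper name is hypothetical.

```python
def column_resistance(resistivity_ohm_m: float, length_m: float, area_m2: float) -> float:
    """Resistance (ohms) of a uniform conductor: R = rho * L / A."""
    return resistivity_ohm_m * length_m / area_m2


# Assumed resistivity of pure mercury at 0 °C (approximate value).
RHO_MERCURY_0C = 9.407e-7  # ohm-meters
AREA_1_MM2 = 1e-6          # 1 square millimeter, in square meters

siemens_unit = column_resistance(RHO_MERCURY_0C, 1.0, AREA_1_MM2)
print(f"Siemens mercury unit ≈ {siemens_unit:.4f} ohm")  # ≈ 0.9407 ohm

# Doubling the column length doubles the resistance.
print(f"{column_resistance(RHO_MERCURY_0C, 2.0, AREA_1_MM2):.4f}")  # 1.8814
```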

This ampere, called the “international ampere,” was not the ampere that scientists use today. Instead, it was realized (transformed from definition into practical reality) with a device called a silver voltameter. The device had two electrodes: a positive terminal (the anode) and a negative terminal (the cathode). The anode was suspended in a solution of silver nitrate. When current passed through the device, silver accumulated on the cathode. Researchers weighed the cathode before and after; the mass of silver deposited, together with how long the current had flowed, indicated how large the current had been.

The international ampere was defined as the current that would deposit precisely 0.001118 grams of silver per second from a silver nitrate solution. More accurate measurements later revealed that this current was actually slightly less than the 1 ampere that scientists thought they were measuring.
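Because the definition fixes a deposition rate, turning a weighing into a current takes a single division. The average current in international amperes is the silver deposited, in grams, divided by 0.001118 g/s times the run’s duration in seconds. The sketch below uses hypothetical weighing results. Its conversion to the modern (absolute) ampere assumes the commonly quoted relation 1 international ampere ≈ 0.99985 A, which this article does not state.

```python
# Deposition rate fixed by the definition of the international ampere.
SILVER_G_PER_AMPERE_SECOND = 0.001118

# Assumed, commonly quoted conversion: 1 international ampere ≈ 0.99985 A.
INTERNATIONAL_TO_ABSOLUTE = 0.99985


def international_amperes(mass_before_g: float, mass_after_g: float, seconds: float) -> float:
    """Average current implied by the silver deposited on the cathode."""
    deposited_g = mass_after_g - mass_before_g
    return deposited_g / (SILVER_G_PER_AMPERE_SECOND * seconds)


# Hypothetical run: the cathode gains 4.0248 g of silver over one hour.
current = international_amperes(50.0000, 54.0248, 3600.0)
print(f"{current:.4f} international A")                         # 1.0000 international A
print(f"{current * INTERNATIONAL_TO_ABSOLUTE:.5f} absolute A")  # 0.99985 absolute A
```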

However, this realization of the ampere was not an absolute measurement. Scientists still had to calibrate the silver voltameter using other instruments. One of them was a voltage standard called a Weston cell, an H-shaped glass container filled with carefully layered beds of chemicals.

Weston cells were famous for their accuracy as well as their reliability: They could produce the same voltage over a long period of time. Voltage, resistance and current are related by Ohm’s law: current equals voltage divided by resistance. So researchers could use a Weston cell with a resistor of known resistance to create a known current for calibrating the silver voltameter.
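Ohm’s law, I = V/R, provides the link. A known voltage across a known resistance fixes the current. Comparing that current with the voltameter’s reading then gives a correction factor for the voltameter. The sketch below is illustrative only. It assumes a nominal cell voltage of about 1.0186 V, a 1-ohm standard resistor, and a hypothetical voltameter reading, and its function names are invented for the example.

```python
def ohms_law_current(volts: float, ohms: float) -> float:
    """Current (A) through a resistor with a known voltage across it: I = V / R."""
    return volts / ohms


def correction_factor(reference_amperes: float, voltameter_amperes: float) -> float:
    """Multiplier that brings the voltameter reading into line with the reference."""
    return reference_amperes / voltameter_amperes


# Assumed values for illustration only.
WESTON_CELL_VOLTS = 1.0186    # nominal Weston cell voltage near 20 °C
STANDARD_RESISTOR_OHMS = 1.0  # hypothetical standard resistor

reference = ohms_law_current(WESTON_CELL_VOLTS, STANDARD_RESISTOR_OHMS)
voltameter_reading = 1.0190   # hypothetical current inferred from weighed silver

factor = correction_factor(reference, voltameter_reading)
print(f"reference current: {reference:.4f} A")  # reference current: 1.0186 A
print(f"correction factor: {factor:.5f}")
```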

Once the silver voltameter was calibrated, it could serve as a primary standard for calibrating another instrument commonly used to calibrate current meters: the ampere balance, a precursor to the Kibble balance that’s now used as an “electronic kilogram” for measuring mass.

The ampere balance worked like this: technicians passed a current through a set of coils, and the magnetic force between the coils produced a physical motion that moved an indicator along a mechanical scale. The indicator’s position on the scale told them how much current was flowing through the coils.
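When the same current runs through a fixed coil and a movable coil connected in series, the magnetic force between them grows with the square of the current: F = kI². The constant k depends only on the coils’ geometry and placement. If that force is balanced against a known weight mg, the current follows as I = √(mg/k). The sketch below assumes this weight-balancing arrangement. It calibrates k with a known current, as a calibrated voltameter would supply, and uses a hypothetical balancing mass chosen for round numbers.

```python
import math

G = 9.80665  # standard gravity, m/s^2


def coil_constant(force_n: float, known_current_a: float) -> float:
    """Calibrate k in F = k * I**2 using a current of known value."""
    return force_n / known_current_a**2


def current_from_balance(balancing_mass_kg: float, k: float) -> float:
    """Current whose coil force F = k * I**2 balances the weight m * g."""
    return math.sqrt(balancing_mass_kg * G / k)


# Hypothetical calibration: a known 1.0 A current needs 1.0 g to balance.
k = coil_constant(0.001 * G, 1.0)

# A current that needs 4.0 g to balance is twice as large (force scales as I**2).
print(f"{current_from_balance(0.004, k):.3f} A")  # 2.000 A
```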

Finding a Better Ampere

In 1921, the General Conference on Weights and Measures (CGPM), an international organization that makes decisions about standards, formally added the ampere as a unit of electricity, making it the fourth unit in the system that would later become the SI. The ampere joined the units for length, time and mass, which had been covered since the 1875 Treaty of the Meter. But scientists were already finding that the silver-voltameter-based definition of the unit of current was no longer accurate enough.

Even beforehand, scientists had grumbled about the London conference’s decision to define the ampere in terms of the silver voltameter. Back in 1917, E.B. Rosa and G.W. Vinal, two scientists at the National Bureau of Standards (NIST’s predecessor), wrote in the Proceedings of the National Academy of Sciences:

At the time of this conference it was the opinion of the delegates from this country that the volt should have been chosen in place of the ampere, because the standard cell was more reproducible than the silver voltameter and was the means then as now actually employed (in conjunction with the ohm) for measuring the ampere by the drop in potential method. The decision of the conference was, however, accepted as final, and researches were undertaken in several different countries, and particularly in this country, with the aim of making the voltameter worthy to bear the responsibility imposed upon it by the London conference.

By 1933, the CGPM was determined to move from this “international” ampere based on the silver voltameter to one based on the so-called absolute system, which used the more fundamental units of the centimeter, gram and second.

In 1935, the International Committee on Weights and Measures (CIPM), which made recommendations that would be reviewed by the CGPM, unanimously approved this suggestion.

After a hiatus during World War II, the international community of scientists took up the problem again. In 1948, the CGPM officially adopted the new definition of the ampere, which the CIPM had approved in 1946. The new definition was based on the force per unit length between two long, parallel wires. This harks back to the original experiment conducted by Ampère himself, and it involves the fundamental units for length, mass and time. Scientists realized this unit in practice using known resistors and Weston cells, which provided a stable resistance and voltage.

In 1960, the ampere, along with five other base units, was incorporated into the newly established International System of Units (SI), still the basis of measurement science today.