Kelvin: History

The kelvin is the base unit of thermodynamic temperature in the International System of Units (SI). But it came at the end of a journey that began long before thermometers even existed.

The earliest attempts at gauging temperature used no fixed scale and no degrees. These proto-thermometers — which we now call thermoscopes — could be used only for comparing one temperature to another, or monitoring temperature changes, and not measuring an exact individual temperature.

About 2,000 years ago, the ancient Greek engineer Philo of Byzantium came up with what may be the earliest design for a thermometer: a hollow sphere filled with air and water, connected by tube to an open-air pitcher. The idea was that air inside the sphere would expand or contract as it was heated or cooled, pushing or pulling water into the tube. In the second century A.D., the Greek-born Roman physician Galen created and may have used a thermometer-like device with a crude 9-degree scale, comprising four degrees of hot, four degrees of cold, and a “neutral” temperature in the middle. However, the markings on this scale didn’t correspond to actual temperature amounts, but just different levels of “hotter” or “colder.”

It wasn’t until the late 1500s and early 1600s that thermometry began to come into its own. The famous Italian astronomer and physicist Galileo, or possibly his friend the physician Santorio, likely came up with an improved thermoscope around 1593: an inverted glass tube placed in a bowl full of water or wine. Santorio apparently used a device like this to test whether his patients had fevers. Shortly after the turn of the 17th century, English physician Robert Fludd also experimented with open-air wine thermometers.

In the mid-1600s, Ferdinand II, the Grand Duke of Tuscany, may have realized that the readings of these open-air thermoscopes were affected not just by temperature but also by air pressure. He designed a sealed version of the thermoscope, in which the wine was contained in a glass tube closed off by melting the glass at its base.

Despite these improvements, there were still no “scales.” Each device had its own unique gradation. Renaissance manufacturers would often set the highest and lowest markings on a thermoscope to their reading on the hottest and coldest days that year.

Battle of the Scales

The first recorded instance of anyone thinking to create a universal scale for thermoscopes was in the early 1700s. In fact, two people had this idea at about the same time. One was a Danish astronomer named Ole Christensen Rømer, who had the idea to select two reference points (the boiling point of water and the freezing point of a saltwater mixture, both of which were relatively easy to recreate in different labs) and then divide the space between those two points into 60 evenly spaced degrees. The other was England’s revolutionary physicist and mathematician Isaac Newton, who in the same year as Rømer announced his own temperature scale, in which 0 was the freezing point of water and 12 was the temperature of a healthy human body. (Newton likely developed this admittedly limited scale to help himself determine the boiling points of metals, whose temperatures would be far higher than 12 degrees.)

The invention of Rømer’s and Newton’s scales turned their thermoscopes into the world’s first bona fide thermometers, and interest in thermometry research exploded.

After a visit to Rømer in Copenhagen, the Dutch-Polish physicist Daniel Fahrenheit was apparently inspired to create his own scale, which he unveiled in 1724. His scale was more fine-grained than Rømer’s, with about four times the number of degrees between water’s boiling and freezing points. Fahrenheit is also credited as the first to use mercury inside his thermometers instead of wine or water. Though we are now fully aware of its toxic properties, mercury is an excellent liquid for indicating changes in temperature.

Originally, Fahrenheit set 0 degrees as the freezing point of a solution of salt water and 96 as the temperature of the human body. But the fixed points were changed so that they would be easier to recreate in different laboratories, with the freezing point of water set at 32 degrees and its boiling point becoming 212 degrees at sea level and standard atmospheric pressure.

Fahrenheit’s scale became hugely popular. It was the primary temperature standard in English-speaking countries until the 1960s and is still favored in a handful of countries including the United States.

But this was far from the end of the development of important temperature scales. In the 1730s, two French scientists, René Antoine Ferchault de Réaumur and Joseph-Nicolas Delisle, each invented their own scales. Réaumur’s set the freezing point of water at 0 degrees and the boiling point of water at 80 degrees, convenient for meteorological use, while Delisle chose to set his scale “backwards,” with water’s boiling point at 0 degrees and 150 degrees (added later by a colleague) as water’s freezing point.

A decade later, Swedish astronomer Anders Celsius created his eponymous scale, with water’s freezing and boiling points separated by 100 degrees—though, like Delisle, he also originally set them “backwards,” with the boiling point at 0 degrees and the ice point at 100. (The points were swapped after his death.)

With so many thermometry proposals floating around, there was confusion. Different papers used different scales, and frequent conversions were necessary. Into this mess stepped physicists who sought to create a scale based on the fundamental physics of temperature.
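To give a sense of what those conversions involved, here is a minimal sketch in Python. It assumes each scale is linear between the freezing and boiling points of water quoted above, and it uses the modern orientation of the Celsius scale. The names FIXED_POINTS and convert are illustrative only and do not come from any standard library.

```python
# Freezing and boiling points of water on each scale, as quoted in the text.
# Celsius is given in its modern orientation (freezing at 0, boiling at 100).
FIXED_POINTS = {
    "celsius": (0.0, 100.0),
    "fahrenheit": (32.0, 212.0),
    "reaumur": (0.0, 80.0),
    "delisle": (150.0, 0.0),  # "backwards": the number falls as it gets hotter
}


def convert(value, source, target):
    """Convert a reading between two scales (hypothetical helper).

    Assumes both scales are linear between water's freezing and boiling points.
    """
    src_freeze, src_boil = FIXED_POINTS[source]
    dst_freeze, dst_boil = FIXED_POINTS[target]
    # Position of the reading between freezing (0.0) and boiling (1.0).
    fraction = (value - src_freeze) / (src_boil - src_freeze)
    # Map that position onto the target scale's fixed points.
    return dst_freeze + fraction * (dst_boil - dst_freeze)


if __name__ == "__main__":
    for scale in FIXED_POINTS:
        print(scale, convert(20.0, "celsius", scale))
```

Because every scale here is pinned to the same two physical events, a single interpolation handles all of them, including Delisle's reversed direction.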

Absolute Temperature

Tied to the idea of the fundamental physics of temperature is the idea that there is a minimum temperature that is theoretically possible to achieve — absolute zero, or, as some put it at the time, “infinite cold.”

Even as early as the 1700s, there were inklings of the concept of an absolute zero. French physicist Guillaume Amontons did some of the earliest work while studying what he perceived as the springiness of air. He noticed that when a gas is cooled down, it pushes back on a liquid with less force than when it is warm. He reasoned that perhaps there was a temperature so low that air would lose all its springiness and that this would represent a physical limit to cold.

During the next 100 years, physicists including Amontons, Swiss-born J.H. Lambert, and French chemist and physicist Joseph Louis Gay-Lussac did extrapolations that set this absolute zero anywhere from -240 to -273 degrees Celsius.

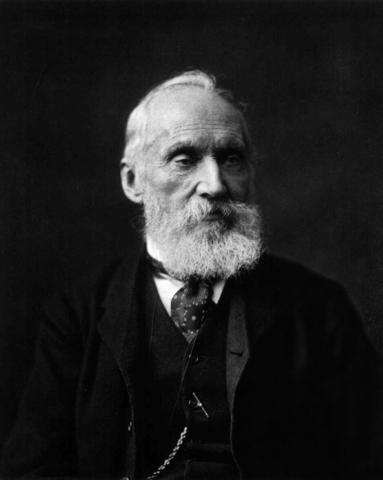

Then, in the middle of the 19th century, British physicist William Thomson, later known as Lord Kelvin, also became interested in the idea of “infinite cold” and made attempts to calculate it. In 1848, he published a paper, “On an Absolute Thermometric Scale,” that stated that this absolute zero was, in fact, -273 degrees Celsius. (It is now set at -273.15 degrees Celsius.)

The scale that bears his name uses increments with the same magnitude as the Celsius scale’s degrees. But instead of setting 0 arbitrarily at the freezing point of water, the Kelvin scale sets 0 at the coldest point possible for matter.
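Because the two scales share the same increment, converting between them is just a constant shift. The sketch below assumes the modern value of -273.15 degrees Celsius for absolute zero quoted above. The function names are hypothetical, chosen only for illustration.

```python
# Absolute zero expressed in degrees Celsius (modern value quoted in the text).
ABSOLUTE_ZERO_C = -273.15


def celsius_to_kelvin(celsius):
    """Shift a Celsius reading so that absolute zero becomes 0 K."""
    if celsius < ABSOLUTE_ZERO_C:
        raise ValueError("temperature is below absolute zero")
    return celsius - ABSOLUTE_ZERO_C


def kelvin_to_celsius(kelvin):
    """Shift a kelvin reading back onto the Celsius scale."""
    if kelvin < 0:
        raise ValueError("temperature is below absolute zero")
    return kelvin + ABSOLUTE_ZERO_C


if __name__ == "__main__":
    print(celsius_to_kelvin(0.0))    # freezing point of water, in kelvins
    print(celsius_to_kelvin(100.0))  # boiling point of water, in kelvins
```

The checks for values below absolute zero reflect the idea that 0 K is a physical limit rather than an arbitrary label.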

The Kelvin scale has been preferred by scientists since 1954, when the General Conference on Weights and Measures (CGPM), an international organization that makes decisions about measurement standards, adopted the kelvin (K) as the base unit for thermodynamic temperature. The CGPM chose the triple point of water, at which water exists as a solid, liquid and gas simultaneously in thermal equilibrium, as the reference point and assigned it a temperature of 273.16 K.

Since then, the CGPM has set several other reference points to improve thermometer calibration.

For more information on the kelvin, see BIPM’s SI Brochure: The International System of Units (SI).